The Akamas AI-powered optimization platform helps performance engineers, SRE teams, and developers keep complex, real-world applications optimized, both in real time and under planned or what-if scenarios.

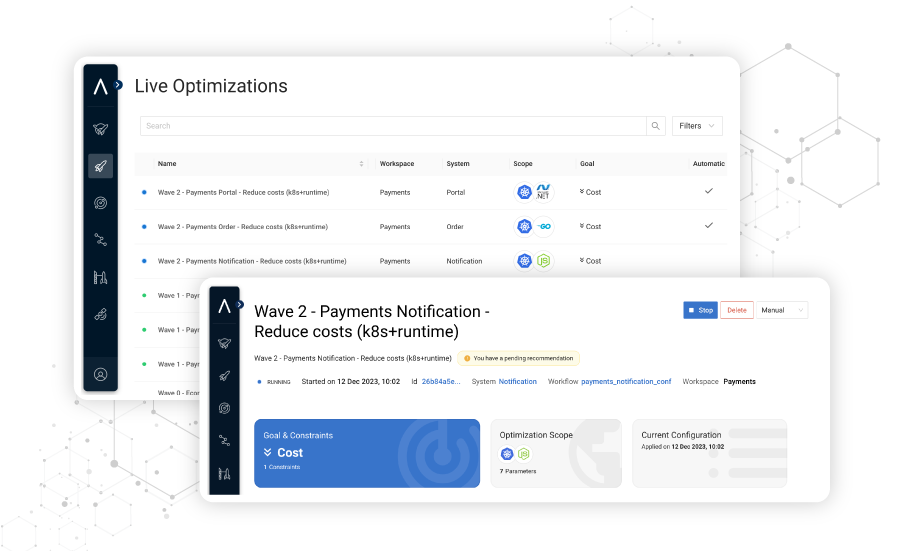

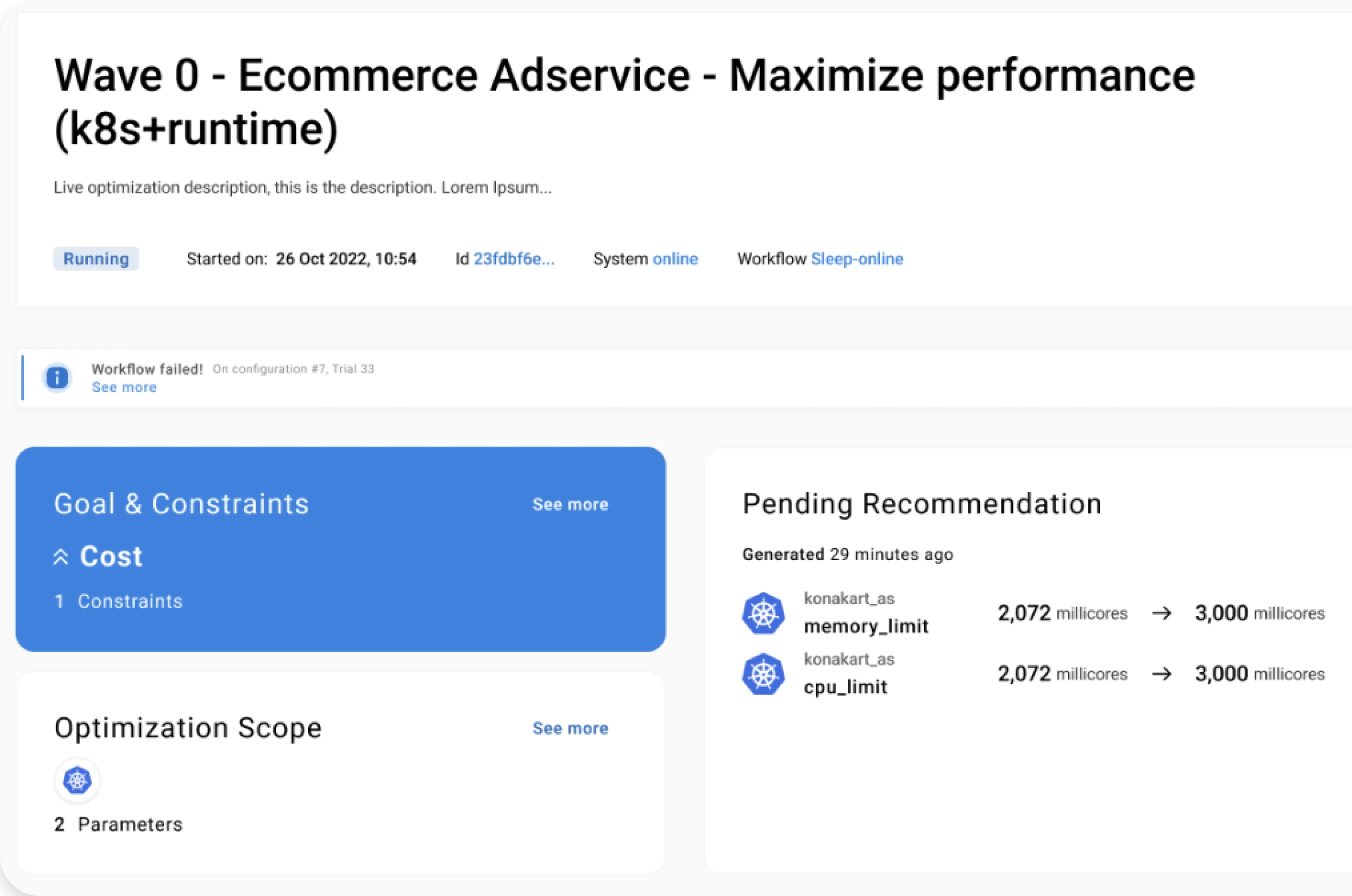

Akamas live optimizations target production systems that must be continuously optimized while running live, as their workloads vary dynamically over time.

This white paper describes Akamas live optimization technology and its key features. These include full-stack, context-aware optimization based on live observations, and safe recommendations that respect defined SLOs and other customizable safety policies.

Key takeaways

Read this white paper to also learn how Akamas compares to solutions that:

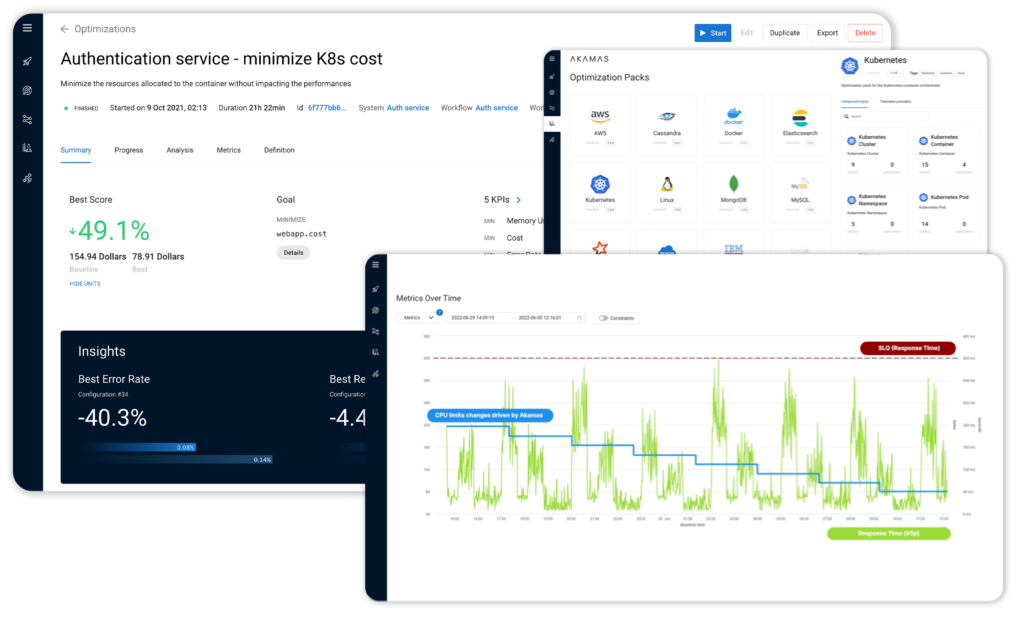

- Only provide simple indicators of how SLOs (e.g., response time) were impacted after changes were applied, but cannot automatically identify optimal configurations or ensure that defined SLOs are met once those configurations are applied.

- Rely on simplistic AI models that operate only at the infrastructure layer and ignore the interplay between the application and infrastructure layers, thus adversely impacting overall cost efficiency, resilience, and end-to-end performance.

- Focus only on recommending Kubernetes configurations without taking into account the dynamics of the application runtime (e.g., the JVM), thus causing configuration mismatches, missed efficiency opportunities, and serious business risks.

© 2024 Akamas S.p.A. All rights reserved. – Via Schiaffino, 11 – 20158 Milan, Italy – P.IVA / VAT: 10584850969