Frequently Asked Questions

All Questions

AI-powered autonomous optimization is a new approach to optimizing applications that leverages Artificial Intelligence (AI) and Machine Learning (ML) techniques to identify the configurations of the IT stack that maximize performance, resilience and cost efficiency.

The complexity of today’s applications, whether monolithic or microservices-based, and of their architectures, which often span both datacenters and clouds, has grown beyond the reach of even the most skilled performance experts. Manual tuning does not fit well with DevOps practices and faster release cycles. Nor can it keep pace with the business while ensuring, at all times, both the optimal tradeoff among performance, resilience and cost efficiency and compliance with SLOs (e.g. response time or transaction throughput). Only AI-powered autonomous optimization allows companies to support their business growth and minimize risks while meeting user expectations and improving cost efficiency.

The Akamas AI-powered autonomous optimization platform is designed to help performance engineers, SRE teams and developers keep complex, real-world applications optimized. It works both in real time and with respect to planned or what-if scenarios, in test/pre-production as well as in production environments.

The Akamas AI-powered optimization platform leverages patented machine learning (ML) techniques to identify optimal full-stack configurations for any application and its underlying technologies. It optimizes with respect to custom-defined goals and constraints (SLOs), without any human intervention and without requiring any code (or byte-code) changes.

Akamas is designed to support all IT optimization initiatives in three ways. It provides insights into the dynamics of complex applications and their underlying IT stacks. It identifies how to improve their performance and their resilience under failure scenarios. Finally, it enables better decisions on how to make them more cost-effective and sustainable.

Competing solutions only support real-time optimization. As a result, they cannot anticipate potential performance and resilience risks or identify potential cost efficiency benefits. By contrast, the Akamas AI-powered autonomous optimization platform supports all optimization initiatives, in both test/pre-production and production environments.

Moreover, competing solutions typically focus on the infrastructure level, have a limited technology scope (e.g. Kubernetes or databases) or support only specific workloads. This limits their adoption and prevents them from correctly capturing the complex interplay among the different application layers in response to varying workloads. By contrast, Akamas can manage any system and takes a full-stack approach.

Finally, competing solutions typically use simplistic performance models based on past resource usage. These models either do not work on real-world optimization problems or fail to ensure that suggested configurations will not negatively impact the defined SLOs. By contrast, Akamas' patented AI optimization methods and goal-driven approach ensure that the SLOs and KPIs representing application performance are taken into account when searching for optimal configurations.

Akamas provides qualitative benefits, such as higher service quality, business agility and customer satisfaction, as well as quantifiable ones. Results vary for each specific application and environment. Nevertheless, Akamas routinely delivers:

- an average cost reduction of 60%;
- performance and resilience improvements in the range of 30% to 70% (e.g. in terms of transaction throughput and response time, typically with lower fluctuations and peaks);
- up to 5x higher operational efficiency (e.g. in terms of tuning hours saved).

Akamas is a technology-agnostic solution that can optimize virtually any application: batch or online, monolithic or microservices-based, on-premises, hybrid or cloud-based, built on any technology from any vendor or cloud provider.

The Akamas platform supports both test/pre-production and production environments. Optimization studies (Offline Optimization mode) optimize systems in test or pre-production environments with respect to planned and what-if scenarios that cannot be run directly in production. Live optimizations (Live Optimization mode) optimize systems while they run live in production, with respect to varying workloads and observed system behavior.

Although Akamas leverages similar AI methods for both optimization studies and live optimizations, it applies them in radically different ways. In test/pre-production environments, the Akamas AI explores the configuration space by executing experiments, accepting that some of them may fail. This lets it identify regions that do not correspond to viable configurations as quickly as possible, and rapidly converge to configurations that improve on the original configuration (baseline).
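The following Python sketch makes the offline exploration strategy more concrete. It uses a plain random search that treats a failed experiment as a signal of a non-viable region and skips nearby candidates. It is not Akamas' patented optimization method. The function `explore`, the `run_experiment` callback (which returns a score, or `None` when the experiment fails), the `exclusion_radius` heuristic and the toy `heap_mb` parameter are all hypothetical illustrations.

```python
import random
from typing import Callable, Optional

Config = dict[str, float]


def explore(
    run_experiment: Callable[[Config], Optional[float]],
    baseline: Config,
    ranges: dict[str, tuple[float, float]],
    budget: int = 30,
    exclusion_radius: float = 0.1,
    seed: int = 0,
) -> tuple[Config, float, list[Config]]:
    """Random-search sketch: higher scores are better, None marks a failed experiment."""
    rng = random.Random(seed)
    baseline_score = run_experiment(baseline)
    if baseline_score is None:
        raise ValueError("the baseline configuration must run successfully")
    best, best_score = baseline, baseline_score
    failures: list[Config] = []

    def distance(a: Config, b: Config) -> float:
        # Largest per-parameter gap, scaled to [0, 1] by each parameter's range.
        return max(abs(a[k] - b[k]) / (hi - lo) for k, (lo, hi) in ranges.items())

    for _ in range(budget):
        candidate = {k: rng.uniform(lo, hi) for k, (lo, hi) in ranges.items()}
        # Skip candidates that fall close to a configuration that already failed.
        if any(distance(candidate, f) < exclusion_radius for f in failures):
            continue
        score = run_experiment(candidate)
        if score is None:
            failures.append(candidate)  # a failure is accepted and remembered, not fatal
        elif score > best_score:
            best, best_score = candidate, score  # keep only improvements over the best so far
    return best, best_score, failures


def toy_experiment(cfg: Config) -> Optional[float]:
    # Hypothetical system: fails below 512 MB of heap and performs best around 1500 MB.
    if cfg["heap_mb"] < 512:
        return None
    return -abs(cfg["heap_mb"] - 1500)


best, best_score, failures = explore(
    toy_experiment, {"heap_mb": 1024.0}, {"heap_mb": (256.0, 2048.0)}, budget=50
)
print(best, best_score, len(failures))
```

If no candidate beats the baseline, the baseline is returned unchanged. A real optimizer would replace uniform sampling with a model-guided choice of the next candidate.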

By contrast, in production environments the Akamas AI relies on observing the effects of configuration changes, combined with automatic detection of workload contexts. It also applies several customizable safety policies, including gradual recommendation, smart constraint evaluation and outlier detection, to ensure that only safe configurations are recommended.
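Two of the safety policies named above can be illustrated with generic techniques. The Python sketch below is an assumption, not Akamas' implementation. It caps each recommended change at a fixed fraction of the current value, which is one simple form of gradual recommendation. It also flags anomalous samples with a median absolute deviation (MAD) modified z-score, which is one common form of outlier detection. The names `gradual_step` and `is_outlier`, the 20% step cap and the 3.5 threshold are illustrative choices.

```python
import statistics


def gradual_step(current: float, target: float, max_change: float = 0.2) -> float:
    """Move from current toward target by at most max_change * |current| per step."""
    limit = abs(current) * max_change
    delta = max(-limit, min(limit, target - current))
    return current + delta


def is_outlier(sample: float, history: list[float], threshold: float = 3.5) -> bool:
    """Modified z-score test: 0.6745 * |x - median| / MAD > threshold."""
    median = statistics.median(history)
    mad = statistics.median([abs(x - median) for x in history])
    if mad == 0:
        return sample != median  # degenerate history: any deviation is anomalous
    return 0.6745 * abs(sample - median) / mad > threshold


# Hypothetical example: lower a CPU request from 2000 to 1000 millicores gradually.
target = 1000.0
value, steps = 2000.0, []
for _ in range(10):  # bounded loop instead of relying on exact float equality
    value = gradual_step(value, target)
    steps.append(value)
    if abs(value - target) < 1e-9:
        break
print(steps)
print(is_outlier(150.0, [100.0, 102.0, 98.0, 101.0, 99.0]))
```

Capping steps relative to the current value makes large reductions happen over several observation cycles. This leaves room to detect a regression before the full change is applied.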

Akamas is full-stack: an optimization can operate on any set of parameters chosen from the application and from any underlying layer of the IT stack. This includes middleware, database, operating system and cloud (e.g. cloud instance options, managed services and serverless computing parameters). For example, when optimizing a Kubernetes microservices application, both runtime-level and container-level parameters can be included in the same Akamas optimization. This is a critical capability (and a key differentiator) because it avoids the configuration mismatches that result from operating only at the container layer. Such mismatches may lead to missed efficiency opportunities and serious business risks, due to resource over-provisioning or (worse) under-provisioning and poor end-to-end application performance.
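The coupling between layers can be illustrated with a small Python sketch. It checks a hypothetical full-stack configuration of a Java microservice on Kubernetes for cross-layer mismatches. The parameter names, the 75% heap-to-limit ratio and the one-GC-thread-per-CPU rule are illustrative rules of thumb assumed here, not Akamas parameters or recommendations. The point is that a change made only at the container layer can silently invalidate a runtime setting.

```python
from dataclasses import dataclass


@dataclass
class FullStackConfig:
    # Hypothetical parameters from two layers of the same microservice.
    container_memory_limit_mb: int       # container layer
    container_cpu_limit_millicores: int  # container layer
    jvm_max_heap_mb: int                 # runtime (JVM) layer
    jvm_gc_threads: int                  # runtime (JVM) layer


def mismatches(cfg: FullStackConfig, heap_fraction: float = 0.75) -> list[str]:
    problems = []
    # The heap must leave room for non-heap JVM memory inside the container limit,
    # otherwise the container risks being OOM-killed under load.
    if cfg.jvm_max_heap_mb > cfg.container_memory_limit_mb * heap_fraction:
        problems.append("JVM max heap leaves too little headroom within the container memory limit")
    # More GC threads than the CPUs allowed by the limit leads to CPU throttling during GC.
    allowed_cpus = max(1, cfg.container_cpu_limit_millicores // 1000)
    if cfg.jvm_gc_threads > allowed_cpus:
        problems.append("more GC threads than CPUs allowed by the container CPU limit")
    return problems


# Shrinking only the container (a container-only optimization) breaks the runtime settings.
print(mismatches(FullStackConfig(4096, 8000, 2048, 8)))  # consistent configuration
print(mismatches(FullStackConfig(2048, 2000, 2048, 8)))  # mismatched configuration
```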

Akamas optimizations are goal-driven. The Akamas AI identifies optimal configurations with respect to any custom-defined optimization objective and to a set of multiple constraints, typically reflecting the SLOs in place (e.g. response time or throughput). An optimization goal can be defined as a formula on one or more KPIs and cost metrics. An optimization constraint can be defined either as an absolute value or relative to the current value (baseline). By contrast, other solutions only indicate how configuration changes affected SLOs after the fact. They do not take SLOs into account when searching for the configuration that is optimal with respect to the optimization goal.
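A goal and its constraints can be sketched in Python as follows. This is an illustration under assumptions, not Akamas' study syntax. The metric names, the `evaluate` helper and the cost-per-throughput goal are hypothetical. Absolute constraints are modeled only as upper bounds, relative constraints only as minimum ratios to the baseline, and a lower goal value is taken to be better.

```python
from typing import Callable

Metrics = dict[str, float]


def evaluate(
    metrics: Metrics,
    baseline: Metrics,
    goal: Callable[[Metrics], float],
    absolute: dict[str, float],
    relative: dict[str, float],
) -> tuple[float, bool]:
    """Return (goal value, whether all constraints hold) for one configuration."""
    # Absolute constraint: the metric must not exceed a fixed bound (e.g. an SLO).
    meets_absolute = all(metrics[m] <= bound for m, bound in absolute.items())
    # Relative constraint: the metric must stay above a fraction of its baseline value.
    meets_relative = all(metrics[m] >= ratio * baseline[m] for m, ratio in relative.items())
    return goal(metrics), meets_absolute and meets_relative


def cost_per_tps(m: Metrics) -> float:
    # Goal formula combining a cost metric and a KPI: hourly cost per unit of throughput.
    return m["cost_usd_per_hour"] / m["throughput_tps"]


baseline = {"cost_usd_per_hour": 10.0, "throughput_tps": 500.0, "p95_response_ms": 180.0}
candidate = {"cost_usd_per_hour": 6.0, "throughput_tps": 490.0, "p95_response_ms": 190.0}
score, feasible = evaluate(
    candidate,
    baseline,
    cost_per_tps,
    absolute={"p95_response_ms": 200.0},  # p95 response time must stay under 200 ms
    relative={"throughput_tps": 0.95},    # throughput must stay within 5% of baseline
)
print(score, feasible)
```

Because feasibility is returned separately from the goal value, an optimizer can discard a cheaper configuration that violates an SLO instead of ranking it first.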

Akamas ranks the automatically identified configurations that improve on the defined optimization goal (e.g. cost reduction) by score. It also provides optimization insights that highlight sub-optimal configurations (e.g. with a lower cost reduction) that improve other relevant KPIs (e.g. throughput or response time). Such configurations may therefore be a better choice to put in place than the optimal one. Optimization insights are automatically generated for any user-selected KPI, in addition to the KPIs used in the defined goals and constraints.
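The ranking and the optimization insights can be sketched as follows. This Python code is a hypothetical illustration, not Akamas' scoring logic. Configurations are ranked by a goal score where higher is better (here, a cost reduction percentage). An insight is any lower-ranked configuration that beats the top one on a user-selected KPI, reported together with what it gives up on the goal.

```python
from dataclasses import dataclass


@dataclass
class Result:
    name: str
    score: float  # goal score, higher is better (e.g. % cost reduction)
    kpis: dict[str, float]


def rank_with_insights(results: list[Result], kpi: str, higher_is_better: bool = True):
    ranked = sorted(results, key=lambda r: r.score, reverse=True)
    best = ranked[0]
    insights = []
    for r in ranked[1:]:
        gain = r.kpis[kpi] - best.kpis[kpi]
        if not higher_is_better:
            gain = -gain  # e.g. for response time, a lower value is a gain
        if gain > 0:
            # Sub-optimal on the goal, but better on the selected KPI:
            # (name, goal score given up, KPI improvement).
            insights.append((r.name, best.score - r.score, gain))
    return ranked, insights


results = [
    Result("A", 42.0, {"throughput_tps": 480.0, "p95_response_ms": 210.0}),
    Result("B", 38.0, {"throughput_tps": 610.0, "p95_response_ms": 150.0}),
    Result("C", 25.0, {"throughput_tps": 450.0, "p95_response_ms": 230.0}),
]
ranked, insights = rank_with_insights(results, "throughput_tps")
print([r.name for r in ranked], insights)
```

Here configuration B gives up 4 points of cost reduction compared with A but delivers substantially higher throughput, which is the kind of tradeoff an insight is meant to surface.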

Akamas recommended configurations can be applied either fully autonomously or only after human review and approval, in which case they can also be modified before being applied.

Akamas optimization is based on identifying configurations of parameters at both the application and the IT stack level (including cloud instance options) that do not require any code changes. Code-level optimization is a complementary approach that may provide significant benefits. However, it typically requires substantial development effort and cannot always be easily applied.

By contrast, Akamas optimizations can be obtained in a fraction of the time and with much less effort, and typically provide immediate and significant improvements. Moreover, wrong configurations prevent applications from achieving the desired performance, resilience and cost efficiency, even when the code is already optimized.

Akamas does not require any agents to be installed and integrates smoothly with any ecosystem. In particular, Akamas can leverage:

- any configuration management tool or interface to change configurations;
- any telemetry provider, monitoring tool or data source to collect KPIs and cost data;
- any load testing tools and scripts already in place.

Akamas provides dozens of out-of-the-box integrations for the most common management tools and interfaces. It also allows custom integrations to be defined (no coding required).

Integration with load testing tools is only required for Offline Optimization. Optimization studies are typically executed in test/pre-production environments, where the workload must be generated against the target system. Akamas provides out-of-the-box integrations with the most common commercial (COTS) and open-source (OSS) load testing tools. Akamas workflows make it easy to fully automate complex sequences of steps: starting and initializing system components, feeding them data, and executing any load testing script. Finally, Akamas features automatic stability windowing, which detects stable configurations without requiring any manual analysis.
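The Python sketch below shows one generic way to detect a stable window. It slides a fixed-size window over a metric's time series and picks the first window whose coefficient of variation (standard deviation divided by mean) falls below a threshold, so that ramp-up samples are excluded from the experiment score. This is a technique assumed for illustration only. Akamas' windowing policies may work differently, and `stable_window`, the window size and the threshold are hypothetical.

```python
import statistics
from typing import Optional


def stable_window(
    samples: list[float], size: int = 10, max_cv: float = 0.05
) -> Optional[tuple[int, int]]:
    """Return (start, end) of the first window whose coefficient of variation <= max_cv."""
    for start in range(len(samples) - size + 1):
        window = samples[start:start + size]
        mean = statistics.fmean(window)
        if mean == 0:
            continue  # the coefficient of variation is undefined for a zero mean
        if statistics.pstdev(window) / abs(mean) <= max_cv:
            return start, start + size
    return None  # the system never stabilized: the experiment would need a longer run


# Hypothetical throughput samples: a ramp-up followed by a steady state.
throughput = [10.0, 30.0, 60.0, 90.0, 100.0, 101.0, 99.0, 100.0, 102.0, 100.0, 99.0]
window = stable_window(throughput, size=5, max_cv=0.02)
if window is not None:
    start, end = window
    print(window, statistics.fmean(throughput[start:end]))
```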

Akamas makes it easy to apply changes to any target system. It can leverage configuration management tools (e.g. Ansible and Terraform), repositories (e.g. Git) and available native interfaces (e.g. Kubernetes APIs). It also provides native operators for remote command execution (e.g. SSH) and for writing to files (in any format). Moreover, thanks to Akamas workflows, the process of changing configurations can be fully automated.
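As a generic illustration of the write-to-file approach, the Python sketch below renders parameter values into a configuration file template using the standard library's `string.Template`. It is not the Akamas file configuration operator, whose template syntax is described in the Akamas documentation. The `write_config` helper, the JVM options template and the parameter names are hypothetical.

```python
import tempfile
from pathlib import Path
from string import Template


def write_config(template_text: str, params: dict[str, object], path: Path) -> str:
    """Render ${name} placeholders with parameter values and write the result to path."""
    # substitute() raises KeyError on a missing parameter, so a half-rendered file
    # is never written (safe_substitute() would silently leave placeholders in place).
    rendered = Template(template_text).substitute({k: str(v) for k, v in params.items()})
    path.write_text(rendered)
    return rendered


# Hypothetical JVM options file, one option per line.
JVM_OPTIONS = "-Xmx${jvm_max_heap_mb}m\n-XX:ParallelGCThreads=${jvm_gc_threads}\n"

with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "jvm.options"
    rendered = write_config(JVM_OPTIONS, {"jvm_max_heap_mb": 1536, "jvm_gc_threads": 2}, target)
    on_disk = target.read_text()
print(rendered)
```

Failing loudly on a missing parameter matters in an automated workflow, because a silently broken configuration file would be indistinguishable from a poorly performing configuration.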

Akamas is designed to support DevOps practices. Thanks to its Infrastructure-as-Code (IaC) approach and comprehensive set of APIs, Akamas optimization steps can be easily integrated into any Continuous Integration and Continuous Deployment (CI/CD) pipeline to implement a continuous optimization process. Akamas provides out-of-the-box integrations with the most common commercial (COTS) and open-source (OSS) value stream delivery solutions.

Akamas optimization is based on identifying configurations of parameters at both the application and the IT stack level, without requiring any code, byte-code or infrastructure changes. By contrast, low-level optimization approaches may obfuscate the expected application behavior, and their optimizations are more difficult to understand and explain.

Akamas is both the name of an independent software vendor and of the solution it develops and commercializes. Akamas is part of Movìri and builds upon more than 20 years of experience in performance tuning, capacity planning and optimization.

Akamas was established in 2019 with the mission to bring AI-powered autonomous optimization to performance engineers, SREs and developers and make it possible for their companies to deliver unprecedented levels of service performance, resilience and cost efficiency.

Akamas counts AWS, Dynatrace, Google Cloud, Gremlin, Micro Focus, Red Hat, Splunk and Tricentis among its technology partners. Headquartered in Milan, Akamas has offices in Boston, Los Angeles and Singapore.

Akamas is an independent software vendor. While at Akamas we also offer consulting around our own products and services, we are laser-focused on delivering the promise of AI-powered autonomous optimization.

To learn more about Akamas, please read our case studies, white papers, blog entries and other resources at https://www.akamas.io/resources/. The Akamas documentation can be found at https://docs.akamas.io/akamas-docs.

You can also sign up for a 15-day free trial at https://www.akamas.io/free-trial/ or book a demo at https://www.akamas.io/demo/.

To contact Akamas, please write to info@akamas.io or contact one of our offices at https://www.akamas.io/contact/.

Fundamentals

AI-powered autonomous optimization is a new approach to optimize applications by leveraging Artificial Intelligence (AI) and Machine Learning (ML) techniques to identify configurations of the IT stack that maximize performance, resilience and cost efficiency.

The complexity of today’s applications, whether monolithic or microservices, and software architectures, often spanning both datacenter and clouds, has grown beyond the reach of even the most skilled performance experts. Any manual tuning approach does not align well with DevOps practices, faster release cycles, and the need to support the business speed and to ensure the optimal tradeoff among performance, resilience and cost efficiency objectives is achieved at all times, while also matching SLOs (e.g. response time or transaction throughput). Only AI-powered autonomous optimization allows companies to support their business growth and minimize risks while matching user expectations and improving cost efficiency.

The Akamas AI-powered autonomous optimization platform has been designed to support performance engineers, SRE teams and developers in keeping complex, real-world applications optimized, both in real-time and with respect to any planned or what-if scenarios, both in test/pre-production and in production environments.

Akamas AI-powered optimization platform leverages patented machine learning (ML) techniques to identify optimal full-stack configurations for any applications and its underlying technologies with respect to any custom-defined goals and constraints (SLOs), without any human intervention and without requiring any code (or byte-code) changes.

Akamas has been implemented to effectively support all IT optimization initiatives, by providing valuable insights on the dynamics of complex applications and underlying IT stacks, by identifying how to improve their performance or resilience under failure scenarios, and by enabling better decision making on how to make them more cost effective and sustainable.

Competing solutions only provide support for real-time optimization thus lacking the ability to anticipate potential performance and resilience risks or identify potential cost efficiency benefits. This should be compared to the comprehensive support provided by Akamas AI-powered autonomous optimization platform for all optimization initiatives in both test/pre-production and production environments.

Moreover, competing solutions typically focus on the infrastructure level, have limited technology scope (e.g. Kubernetes or databases) or only support specific workloads, thus limiting their adoption and not being able to correctly capture the complex interplay among the different application layers in response to varying workloads. This should be compared to Akamas ability to manage any system and full-stack approach.

Finally, competing solutions typically use simplistic performance models based on past resource usage that do not work when tackling real-world optimization problems or fail to ensure that suggested configurations will not negatively impact defined SLOs. This is to be compared to Akamas patented AI optimization methods and goal-driven approach ensuring that SLOs and KPIs representing application performance are taken into account when looking for optimal configurations.

Akamas provides several quantifiable and unquantifiable benefits, such as higher service quality, business agility and customer satisfaction. While results may vary for each specific application and environment, Akamas routinely provides 60% cost reduction on average, improved service resilience and performance in the range of 30% to 70% (e.g. in terms of transaction throughput and response time, typically with lower fluctuations and peaks), and increased operational efficiency up to 5x (e.g. in terms of saved tuning hours).

Key Capabilities

Akamas is a technology-agnostic solution that can optimize virtually any application, whether batch or online, monolithic or microservices, on-premises, hybrid or cloud-based, relying on any technology, from any vendors and cloud providers.

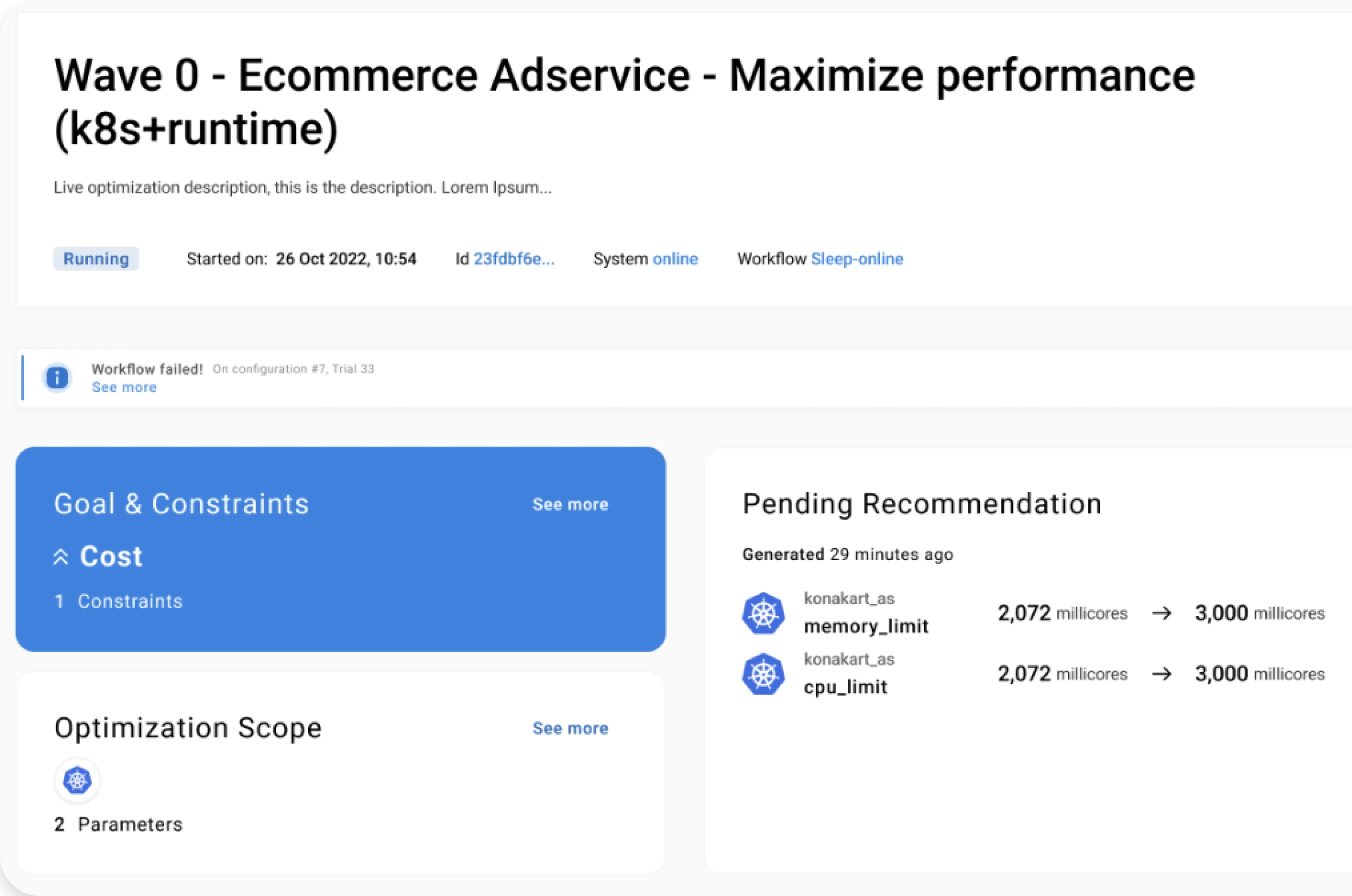

The Akamas platform supports both test/pre-production and production environments: optimization studies (Offline Optimization mode) are used to optimize systems in test or pre-production environments, with respect to planned and what-if scenarios that cannot be directly run in production, while live optimizations (Live Optimization mode) are applied to systems to be optimized while running live in production environments with respect to varying workloads and observed system behaviors.

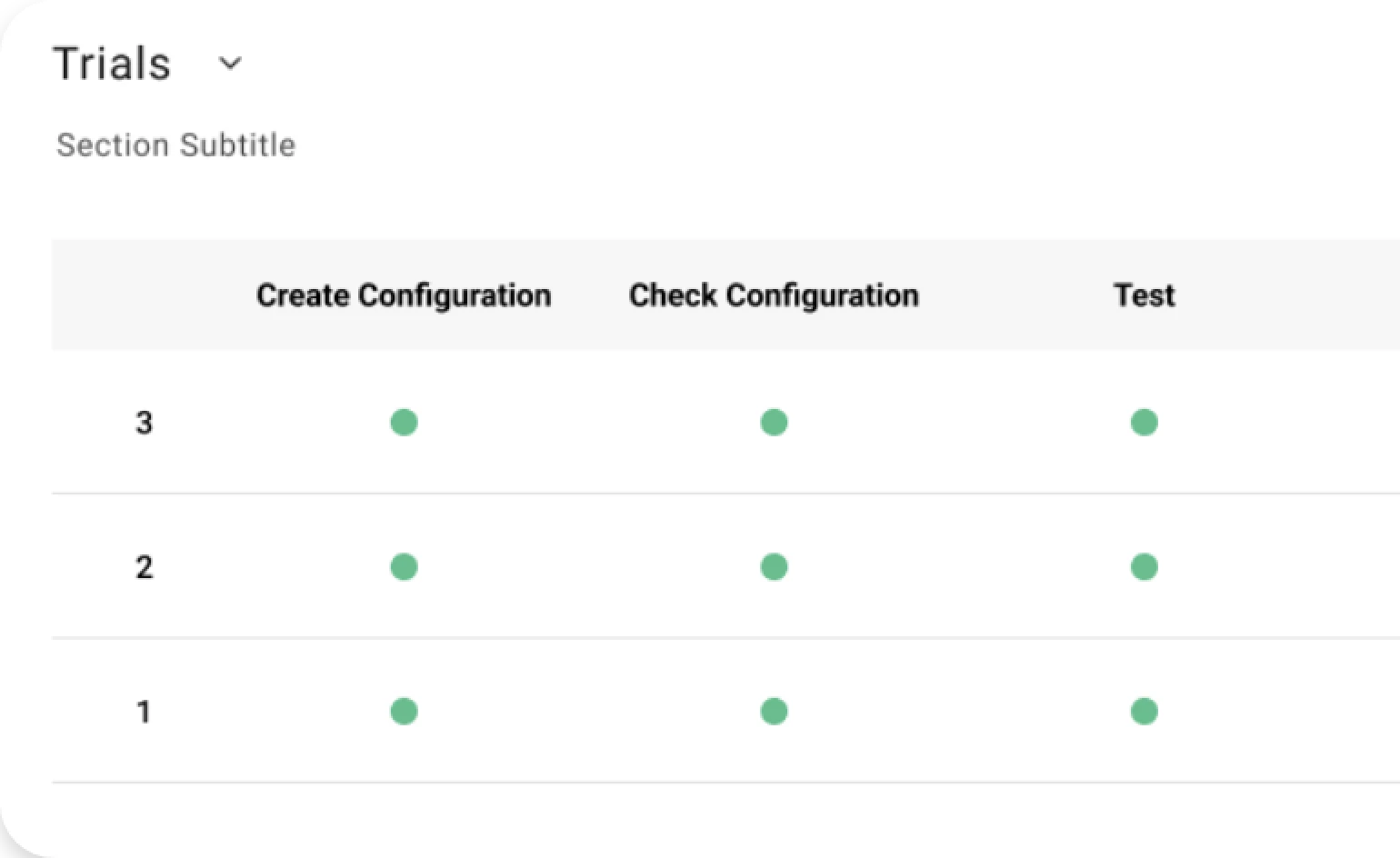

Akamas leverages similar AI methods for both live optimizations and optimization studies, the way these methods are applied is radically different. In test/pre-production environments, the Akamas AI has been designed to explore the configuration space by executing experiments, by also accepting potential failed experiments, so as to identify regions that do not correspond to viable configurations as quickly as possible, and rapidly converge to configurations that improve over the original configuration (baseline).

On the contrary, in production environments, the Akamas AI has been designed to rely on observations of configuration changes combined with the automatic detection of workload contexts, and to also apply several (customizable) safety policies, including gradual recommendation, smart constraint evaluation and outlier detection, in order to ensure that only safe configurations are recommended.

Akamas is full-stack as optimization can operate on any set of parameters chosen from the application and any other underlying layers of the IT stack, including middleware, database, operating system and cloud (including cloud instance options, managed services and serverless computing parameters). For example, when optimizing a Kubernetes microservices application, parameters at both runtime and container level can be included in Akamas optimizations. This is a critical capability (and key differentiator) as it makes it possible to avoid configuration mismatches resulting from operating only at the container layer, which in turn may result in missed efficiency opportunities and serious business risks, due to resource over-provisioning or (worse) under-provisioning and bad end-to-end application performance.

Akamas optimizations are goal-driven as Akamas AI can identify optimal configurations with respect to any custom-defined optimization objective and set of multiple constraints typically reflecting any SLOs in place (e.g. response time or throughput). An optimization goal can be defined as a formula on one or more KPIs and cost metrics and an optimization constraint can be defined as either referring to an absolute value or with respect to the current value (baseline). This goal-driven feature should be compared to other solutions that only provide indicators of how configurations did impact SLOs after changes, but do not take SLOs into account when looking for the optimal configuration with respect to the optimization goal.

Akamas not only provides the automatically identified configurations as a ranked list (by score) that are improving with respect to defined optimization goal (e.g. cost reduction), but also provides optimization insights on whether there are sub-optimal configurations (e.g. lower cost reduction) that would improve other relevant KPIs (e.g. throughput or response time), and that, as such, would represent a better configuration to put in place with respect to the optimal one. Akamas optimization insights are automatically generated for any user-selected KPI, in addition to KPIs from the defined goals and constraints.

Akamas recommended configurations can be either applied in a fully autonomously mode or only after human approval and review, thus possibly also modifying them before being applied.

Akamas optimization is based on identifying configurations of parameters at both application and IT stack level (including cloud instance options) that do not require any code changes. Code-level optimization may represent a complementary approach that may provide significant benefits, while typically requiring substantial development effort and not being always easily applied in all circumstances.

On the contrary, Akamas optimizations can be obtained in a fraction of the time (and with much less effort) and typically provide immediate and significant improvements. Finally, wrong configurations would prevent achieving the desired performance, resilience and cost efficiency, even when the code is already optimized.

Prerequisites & Integrations

Akamas does not require any agents to be installed. Akamas can smoothly integrate with any ecosystem. In particular, Akamas can leverage any configuration management tool or interface to change configurations, can leverage any telemetry provider, monitoring tool or data source to collect KPIs and cost data, and any load testing tools and scripts already in place. Akamas provides dozens of out-the-box integrations for the most common management tools and interfaces and also allows custom integrations to be defined (no coding required).

Akamas integration with load testing tools is only required to support Offline Optimization. These optimization studies are typically being executed in test/pre-production environments where the workload is being generated against the target system. Akamas provides out-of-the-box integrations with most common COTS and OOS tools. Akamas workflows make it easy to fully automate complex sequences of steps to start, initialize, feed data to system components and execute any load testing scripts. Finally, Akamas features the automatic stability windowing detection capabilities that enable automated detection of stable configurations without requiring any manual analysis.

Akamas makes easy to apply changes to any target systems, by leveraging any configuration management tools (e.g. Ansible and Terraform) or repositories (e.g. Git) and available native interfaces (e.g. Kubernetes APIs) and its native operators for remote command execution (e.g. SSH) and write-to-file (in any format). Moreover, thanks to Akamas workflows, the process of changing configurations can be fully automated.

Akamas has been designed and implemented to support DevOps practices. Thanks to its Infrastructure-as-Code (IaC) approach and comprehensive set of APIs, Akamas optimization steps can be easily integrated into any Continuous Integration and Continuous Deployment (CI/CD) pipelines to implement a continuous optimization process. Akamas provides out-the-box integrations with most common COTS and OOS value stream delivery solutions.

Akamas optimization is based on identifying configurations of parameters at both application and IT stack levels without requiring any code, byte-code or infrastructure changes. This should be compared with low-level optimization approaches that may obfuscate the expected application behavior and whose optimizations are less difficult to understand and explain.

© 2024 Akamas S.p.A. All rights reserved. – Via Schiaffino, 11 – 20158 Milan, Italy – P.IVA / VAT: 10584850969