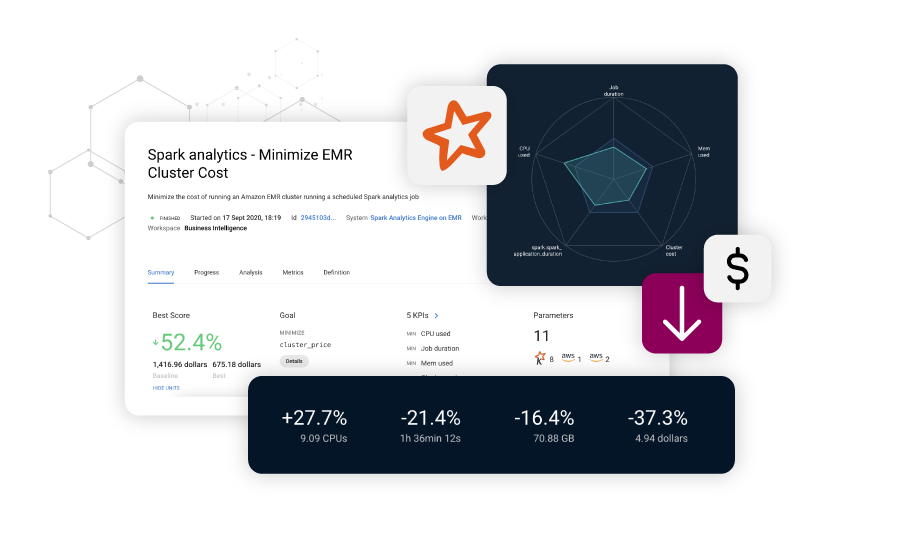

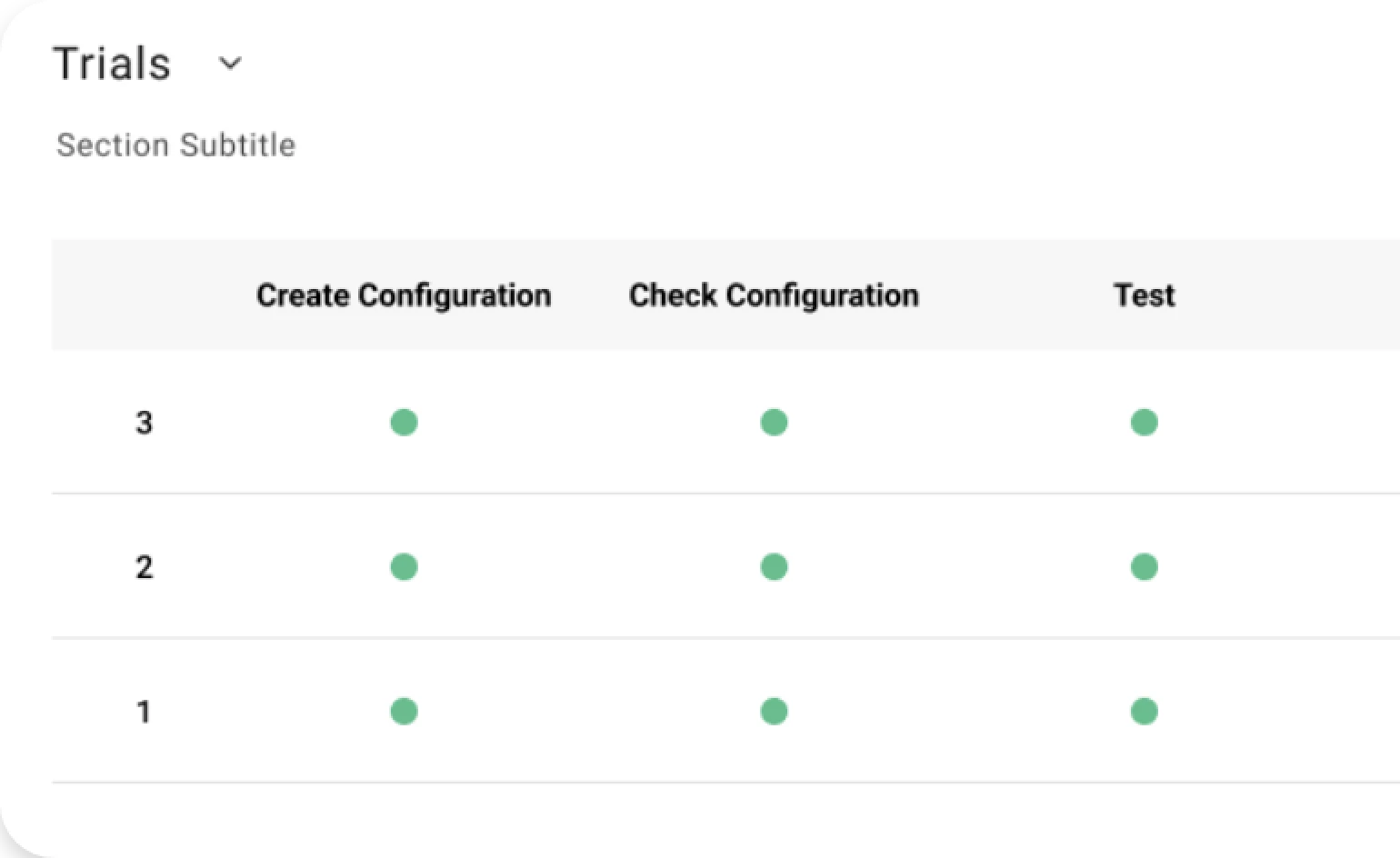

Tuning Spark jobs and rightsizing their underlying infrastructure so that big data applications run faster at lower cost is more art than science.

Whether jobs run on premises or in the cloud, there are thousands of possible configurations. On top of that, the data-driven nature of big data architectures makes their performance (and reliability) highly sensitive to both the specific application and the datasets it processes.
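To see how quickly the configuration space grows, the sketch below enumerates a grid over just five real Spark properties. The candidate values for each property are assumptions picked for illustration, not tuning recommendations, and the helper names `count_configurations` and `iter_configurations` are hypothetical.

```python
import math
from itertools import product

# Illustrative candidate values for five real Spark properties.
# The value ranges are assumptions chosen for this example only.
SEARCH_SPACE = {
    "spark.executor.instances": [2, 4, 8, 16, 32],
    "spark.executor.cores": [1, 2, 4, 8],
    "spark.executor.memory": ["2g", "4g", "8g", "16g"],
    "spark.sql.shuffle.partitions": [50, 100, 200, 400, 800],
    "spark.memory.fraction": [0.4, 0.5, 0.6, 0.7, 0.8],
}


def count_configurations(space):
    """Size of the full grid: the product of each property's option count."""
    return math.prod(len(values) for values in space.values())


def iter_configurations(space):
    """Yield every combination of values as a {property: value} dict."""
    keys = list(space)
    for combo in product(*space.values()):
        yield dict(zip(keys, combo))


if __name__ == "__main__":
    print(count_configurations(SEARCH_SPACE))  # 5 * 4 * 4 * 5 * 5 = 2000
    print(next(iter_configurations(SEARCH_SPACE)))
```

Even this small, coarse grid yields 2,000 candidate configurations, and because performance depends on the application and its data, each candidate would in principle have to be benchmarked per job and dataset. That cost is why exhaustive search is rarely practical.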

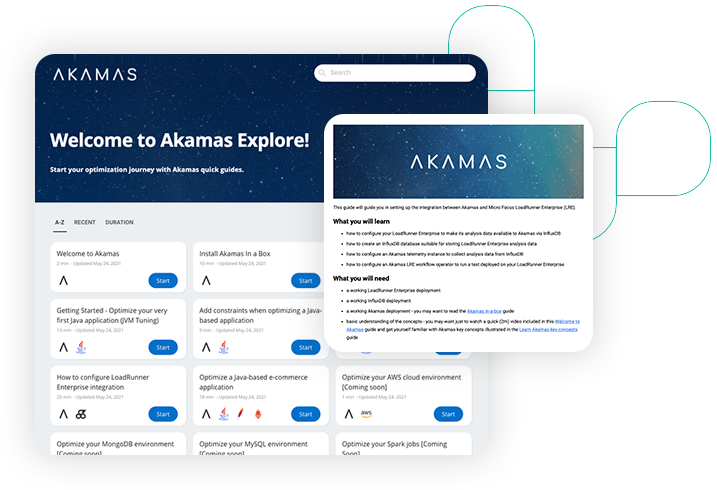

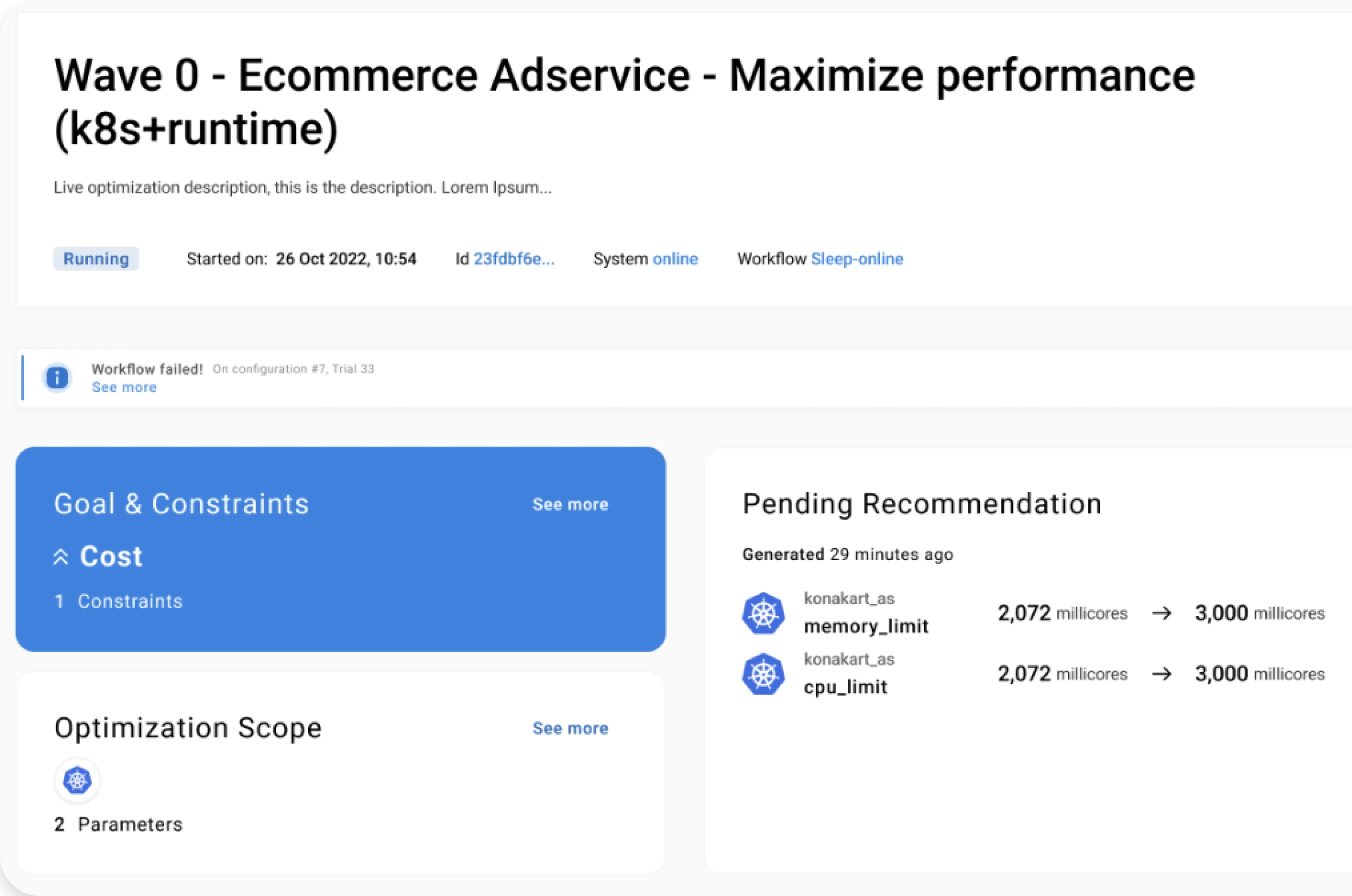

Akamas leverages AI to provide insights into potential tradeoffs and to recommend configurations that minimize resource usage and maximize performance, reducing costs while meeting SLOs.