Insights from KubeCon 2023 – K8s for GenAI, Efficiency, and Sustainability

Last week, I attended KubeCon 2023 in Chicago. It's a conference I prioritize because it's a unique opportunity to learn what the cloud-native community is working on and where it's headed.

I came home with some new insights about the direction of Kubernetes (K8s) projects and of initiatives led by prominent K8s users. In this post, I'll share my key takeaways. I'll focus on the areas I consider crucial and where I saw the most exciting news: cost efficiency, performance, and reliability in the K8s ecosystem. I hope others find them useful as well.

Let’s dive in!

GenAI: The next big thing in the K8s world

I have to admit I was surprised by how much Generative AI took center stage during the keynotes! It shows once again how AI is transforming every sector of the computing industry.

But what does GenAI have to do with K8s? Priyanka Sharma, Executive Director of the CNCF, noted that few people realize K8s is the infrastructure powering the GenAI rocket!

During her presentation, Priyanka demoed a chatbot powered by a Large Language Model (LLM), running right from her laptop. There was a minor hiccup, though, when the database pod ran into issues… I suspect a dreaded OOM kill or CPU throttling hit the KubeCon keynote 🙂

The keynotes also delved into the complexities of running AI workloads on K8s.

NVIDIA representatives discussed how hard it is to rightsize GPUs and manage AI workload performance. This is very different from running traditional web applications, which were the original focus of K8s. Interestingly, a new Dynamic Resource Allocation (DRA) model is in the works. It will allow better management of GPUs, including sharing them among multiple pods.

The keynote by Google's Tim Hockin, in which he reflected on the future of Kubernetes, was insightful. As a K8s co-founder, he stressed the challenge that AI workloads will pose for the platform. He predicted that these workloads will demand substantial resources, potentially thousands of cores! Managing these resources efficiently is crucial for cost-effectiveness and also for sustainability.

It looks like efficiency and performance tuning will be critical to Kubernetes' future success. We'll see more configuration options for fine-tuning resource allocation for AI workloads. That's great, but it also means K8s users will have a few more "knobs" to figure out.

Exciting times ahead if you care about K8s optimization!

Sustainability!

Sustainability really took the spotlight in the keynotes and sessions. Why focus on this? Data centers consume about 2% of global electricity, and we're edging close to the critical 1.5 °C global warming limit. It's a wake-up call for the IT world to step up.

Before we can make a dent in this, we need the right metrics to pinpoint where we emit the most carbon. That's one of the trickier parts of sustainability: getting a clear picture of the carbon footprint of software and infrastructure isn't straightforward yet.

The Software Carbon Intensity (SCI) specification, developed by the Green Software Foundation, defines how to calculate the carbon intensity of software. The Carbon Aware SDK helps identify when and where the electricity supply is cleanest. Kepler complements both. It's an open-source project that uses eBPF to estimate energy consumption and exposes the results as Prometheus metrics.
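The SCI specification expresses carbon intensity as a rate, ((E × I) + M) per R. In that formula:

- E is the energy consumed by the software.
- I is the location-based marginal carbon intensity of that electricity.
- M is the embodied emissions of the hardware.
- R is a functional unit, such as an API request or a user.

Below is a minimal Python sketch of the arithmetic. The function name and all the figures are hypothetical and only illustrate the calculation.

```python
def sci_score(energy_kwh: float, intensity_g_per_kwh: float,
              embodied_g: float, functional_units: float) -> float:
    """Software Carbon Intensity: ((E * I) + M) per R, in gCO2e per functional unit.

    energy_kwh          -- E, energy consumed by the software over the window
    intensity_g_per_kwh -- I, carbon intensity of that electricity (gCO2e/kWh)
    embodied_g          -- M, share of hardware embodied emissions for the window
    functional_units    -- R, e.g. API requests served over the same window
    """
    if functional_units <= 0:
        raise ValueError("functional_units must be positive")
    operational_g = energy_kwh * intensity_g_per_kwh  # operational emissions: E * I
    return (operational_g + embodied_g) / functional_units


# Hypothetical figures: 12 kWh at 400 gCO2e/kWh, 1,200 g embodied, 100,000 requests
print(f"{sci_score(12, 400, 1_200, 100_000):.3f} gCO2e per request")
```

This example prints `0.060 gCO2e per request`. R sits in the denominator, so the score improves in three ways: using less energy, using cleaner electricity, or serving more useful work with the same resources.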

Once you have visibility, you can start optimizing. For example, rightsizing your K8s pods can cut CO2 emissions by 45%. You can also simply turn pods off when they aren't needed. That's the goal of kube-green, a project developed by our friend Davide Bianchi of Mia-Platform, which shuts down K8s resources during inactive periods.
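The snippet below is not kube-green's code or configuration format. It's a hypothetical Python sketch of the arithmetic behind "turning pods off." It assumes workloads only need to run Monday to Friday, 08:00 to 20:00. It checks whether a timestamp falls inside that window and computes what share of the week the workloads are actually up.

```python
from datetime import datetime, timedelta

# Hypothetical schedule: workloads run Monday-Friday, 08:00-20:00, and sleep otherwise.
WORK_DAYS = range(0, 5)        # datetime.weekday(): Monday=0 ... Friday=4
WAKE_HOUR, SLEEP_HOUR = 8, 20


def is_awake(ts: datetime) -> bool:
    """True if the workloads should be running at this timestamp."""
    return ts.weekday() in WORK_DAYS and WAKE_HOUR <= ts.hour < SLEEP_HOUR


def weekly_uptime_fraction(start: datetime) -> float:
    """Share of the 168 hourly slots in one week during which workloads run."""
    slots = [start + timedelta(hours=h) for h in range(7 * 24)]
    return sum(is_awake(t) for t in slots) / len(slots)


uptime = weekly_uptime_fraction(datetime(2023, 11, 6))  # a Monday, 00:00
print(f"up {uptime:.1%} of the week, {1 - uptime:.1%} of pod-hours avoided")
```

Under this schedule, workloads run 60 of 168 hours a week, so roughly 64% of pod-hours can be avoided. Any always-on components reduce that figure in practice.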

Carbon-aware workload scheduling can also help. For instance, the carbon-aware KEDA operator lets you scale workloads based on the carbon intensity of the cloud regions where they run.
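One way to make scaling carbon-aware is to lower the maximum replica count when carbon intensity is high. The Python sketch below illustrates that idea with a hypothetical threshold table. It is not the operator's code or configuration format, and the intensity bounds and replica caps are made-up values.

```python
# Hypothetical policy: the cleaner the grid (lower gCO2e/kWh), the more replicas we allow.
# Entries are (carbon intensity upper bound, replica cap), sorted by bound.
POLICY = [(200, 50), (400, 30), (600, 10)]
DIRTIEST_GRID_CAP = 5  # cap when intensity exceeds every bound


def max_replicas_for(intensity_g_per_kwh: float) -> int:
    """Return the replica cap for the first bound the intensity falls under."""
    for bound, cap in POLICY:
        if intensity_g_per_kwh <= bound:
            return cap
    return DIRTIEST_GRID_CAP


def carbon_aware_replicas(desired: int, intensity_g_per_kwh: float,
                          min_replicas: int = 1) -> int:
    """Clamp the autoscaler's desired replica count to the carbon-based cap."""
    cap = max_replicas_for(intensity_g_per_kwh)
    return max(min_replicas, min(desired, cap))


for intensity in (150, 350, 900):
    print(intensity, carbon_aware_replicas(desired=40, intensity_g_per_kwh=intensity))
```

This design caps replica counts rather than forcing them. The autoscaler stays in charge of tracking demand, but less extra capacity runs while the grid is carbon-heavy. The `min_replicas` floor protects availability.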

Overall, it was encouraging to see sustainability as a focal point and the progress being made. In IT, where energy waste is all too common, we all need to play a part in reducing emissions. Although interest in actual carbon reduction is still growing, the good news is that cost optimization, a more common concern, often leads to carbon savings as well.

K8s cost optimization roll call

This year, cost optimization emerged as a major focus. There's growing recognition that K8s deployments can be highly inefficient. A strong optimization strategy can deliver substantial cost and carbon savings.

15,000 Minecraft players vs. one K8s cluster: Justin Head showed that running K8s on bare metal delivered 65% cost savings. His team used the Kubernetes Cluster API and other open-source tools to get cloud-native benefits on a bare-metal setup. One clever move was tuning the K8s scheduler policy for more efficient pod placement, using the NodeResourcesFit plugin's MostAllocated scoring strategy. See the K8s scheduler docs for details. Slide deck here.
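The NodeResourcesFit plugin supports two scoring strategies:

- MostAllocated favors nodes that already have a high share of their resources requested (bin packing).
- LeastAllocated, the default, favors emptier nodes (spreading).

The simplified Python sketch below mirrors that per-resource scoring arithmetic for CPU and memory with equal weights. It ignores the scheduler's filtering phase, other score plugins, and score normalization. The node and pod sizes are hypothetical.

```python
from typing import Callable, Dict, Optional

MAX_NODE_SCORE = 100  # kube-scheduler scores nodes on a 0-100 scale
Resources = Dict[str, int]  # e.g. {"cpu": millicores, "memory": MiB}


def most_allocated(requested: int, capacity: int) -> int:
    """Higher score for fuller nodes (bin packing)."""
    if capacity == 0:
        return 0
    return min(requested, capacity) * MAX_NODE_SCORE // capacity


def least_allocated(requested: int, capacity: int) -> int:
    """Higher score for emptier nodes (spreading)."""
    if capacity == 0 or requested > capacity:
        return 0
    return (capacity - requested) * MAX_NODE_SCORE // capacity


def node_score(allocatable: Resources, requested: Resources, pod: Resources,
               scorer: Callable[[int, int], int],
               weights: Optional[Resources] = None) -> int:
    """Weighted average of per-resource scores, counting the incoming pod's requests."""
    weights = weights or {"cpu": 1, "memory": 1}
    total = weight_sum = 0
    for resource, weight in weights.items():
        after = requested.get(resource, 0) + pod.get(resource, 0)
        total += scorer(after, allocatable[resource]) * weight
        weight_sum += weight
    return total // weight_sum if weight_sum else 0


# Hypothetical cluster: two identical nodes, one busy and one nearly empty.
allocatable = {"cpu": 4000, "memory": 16384}
nodes = {"busy": {"cpu": 3000, "memory": 12288}, "idle": {"cpu": 500, "memory": 2048}}
pod = {"cpu": 500, "memory": 1024}

for name, scorer in (("MostAllocated", most_allocated), ("LeastAllocated", least_allocated)):
    scores = {n: node_score(allocatable, req, pod, scorer) for n, req in nodes.items()}
    print(name, scores, "->", max(scores, key=scores.get))
```

With these numbers, MostAllocated scores the busy node 84 and the idle node 21, so it places the pod on the busy node. LeastAllocated scores them 15 and 78 and picks the idle node. Packing pods onto fewer, fuller nodes frees up whole machines, which is the intuition behind using MostAllocated to cut costs.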

Sustainable scaling of Kubernetes workloads: Vinay Kulkarni from eBay highlighted the challenge of accurately defining pod resource requirements. Efficiency is crucial, but under-provisioning pods risks hurting performance and availability. The eBay team is exploring two approaches:

- In-place pod resizing, which adjusts resources without restarting pods.
- AI-driven automated pod sizing, which aims to optimize resource usage without violating Service Level Objectives (SLOs).

Slide deck here.
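This is not eBay's method, which the talk describes as AI-based. A common baseline for defining pod resource requests is to size them from a high percentile of observed usage plus some headroom. The Python sketch below does that for CPU. The 95th percentile, 15% headroom, and 50-millicore floor are assumptions chosen for illustration.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], q: float) -> float:
    """Nearest-rank percentile, q in (0, 100]."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    rank = math.ceil(q * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def recommend_cpu_request(usage_millicores: Sequence[float], q: float = 95.0,
                          headroom: float = 1.15, floor_m: int = 50) -> int:
    """Request = q-th percentile of observed usage, plus headroom, never below a floor."""
    return max(floor_m, math.ceil(percentile(usage_millicores, q) * headroom))


# Hypothetical usage samples (millicores) collected over a representative period
samples = list(range(100, 1100, 10))
print(recommend_cpu_request(samples))
```

The percentile and headroom make the efficiency/reliability trade-off explicit. However, a static rule like this ignores how the application actually behaves under CPU throttling or memory pressure. SLO-aware, automated approaches aim to close that gap.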

Kubernetes on a budget: How to get pay-per-use right: Karim Lakhani from Intuit described how his team cost-optimized a high-scale API gateway running across more than 30 clusters. They focused on fine-tuning Horizontal Pod Autoscaler (HPA) configurations and Cluster Autoscaler settings, manually adjusting scaling metrics and pod resource limits. Slide deck here.
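Much of this tuning revolves around the core HPA formula documented by Kubernetes:

desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)

The HPA skips scaling when the ratio is within a tolerance, 0.1 by default. The Python sketch below reproduces only that calculation and the min/max clamp. It deliberately omits stabilization windows, scaling policies, and missing-metric handling. The example values are hypothetical.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int = 1,
                         max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Core HPA calculation: ceil(current * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    if abs(1.0 - ratio) <= tolerance:
        desired = current_replicas  # close enough to target: don't scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(desired, max_replicas))


# Hypothetical: 4 replicas at 90% average CPU utilization against a 60% target
print(hpa_desired_replicas(4, current_metric=90, target_metric=60))  # scale out
print(hpa_desired_replicas(4, current_metric=63, target_metric=60))  # within tolerance
```

The first call scales out to 6 replicas. In the second, the 5% deviation is inside the tolerance, so the count stays at 4. Even this simplified view shows how many knobs interact: the target value, the tolerance, and the min/max bounds.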

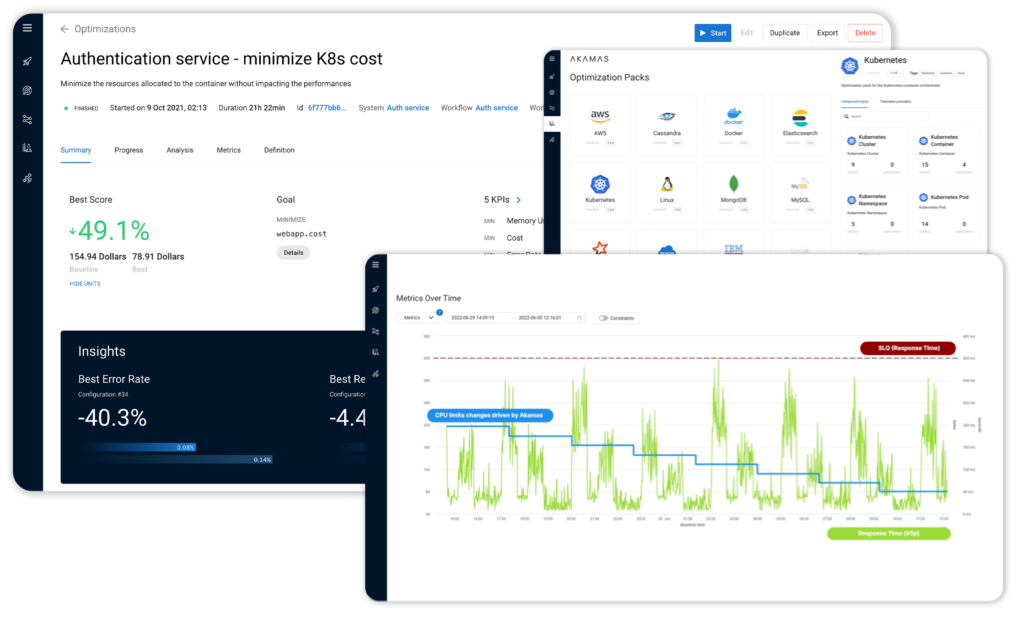

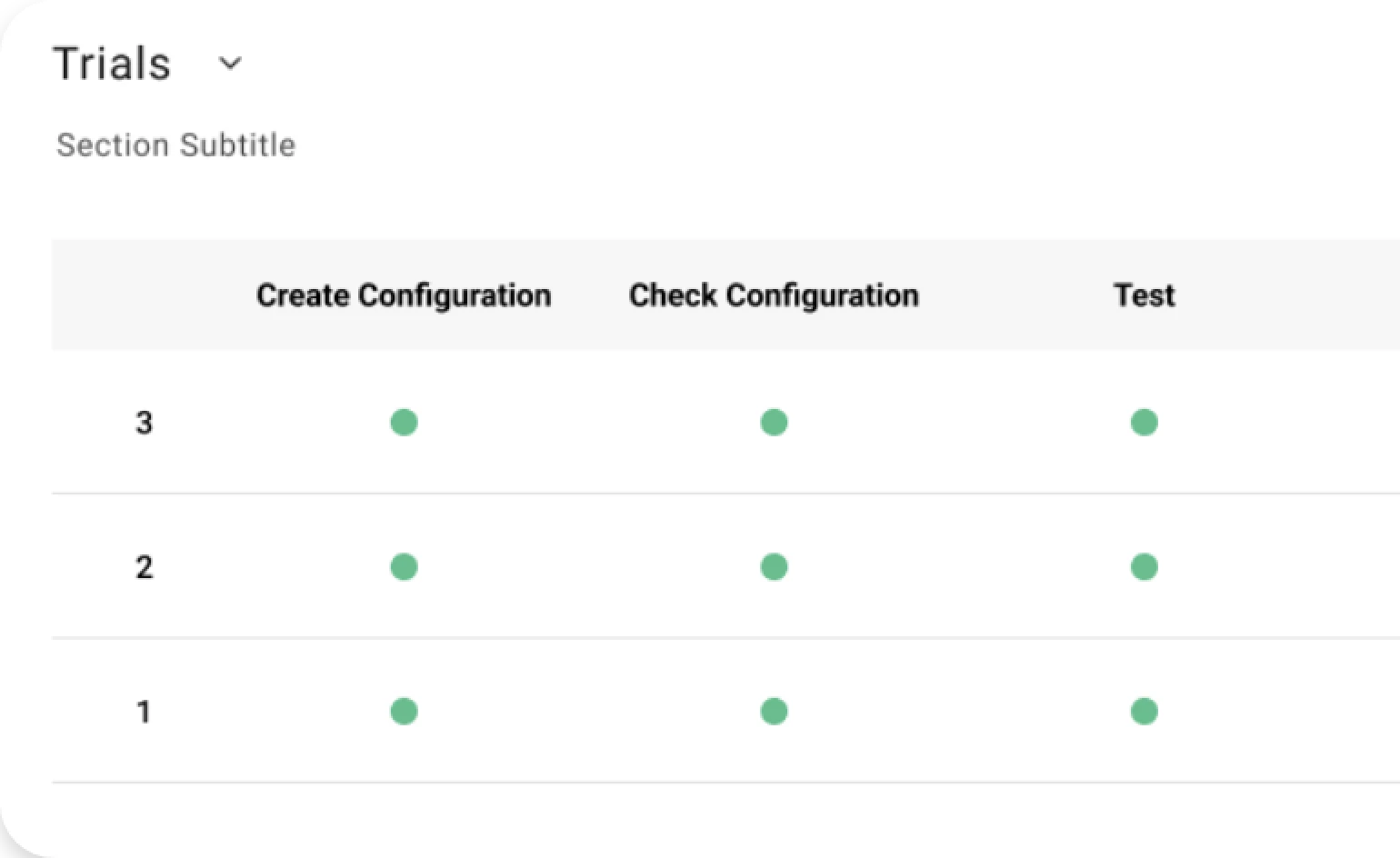

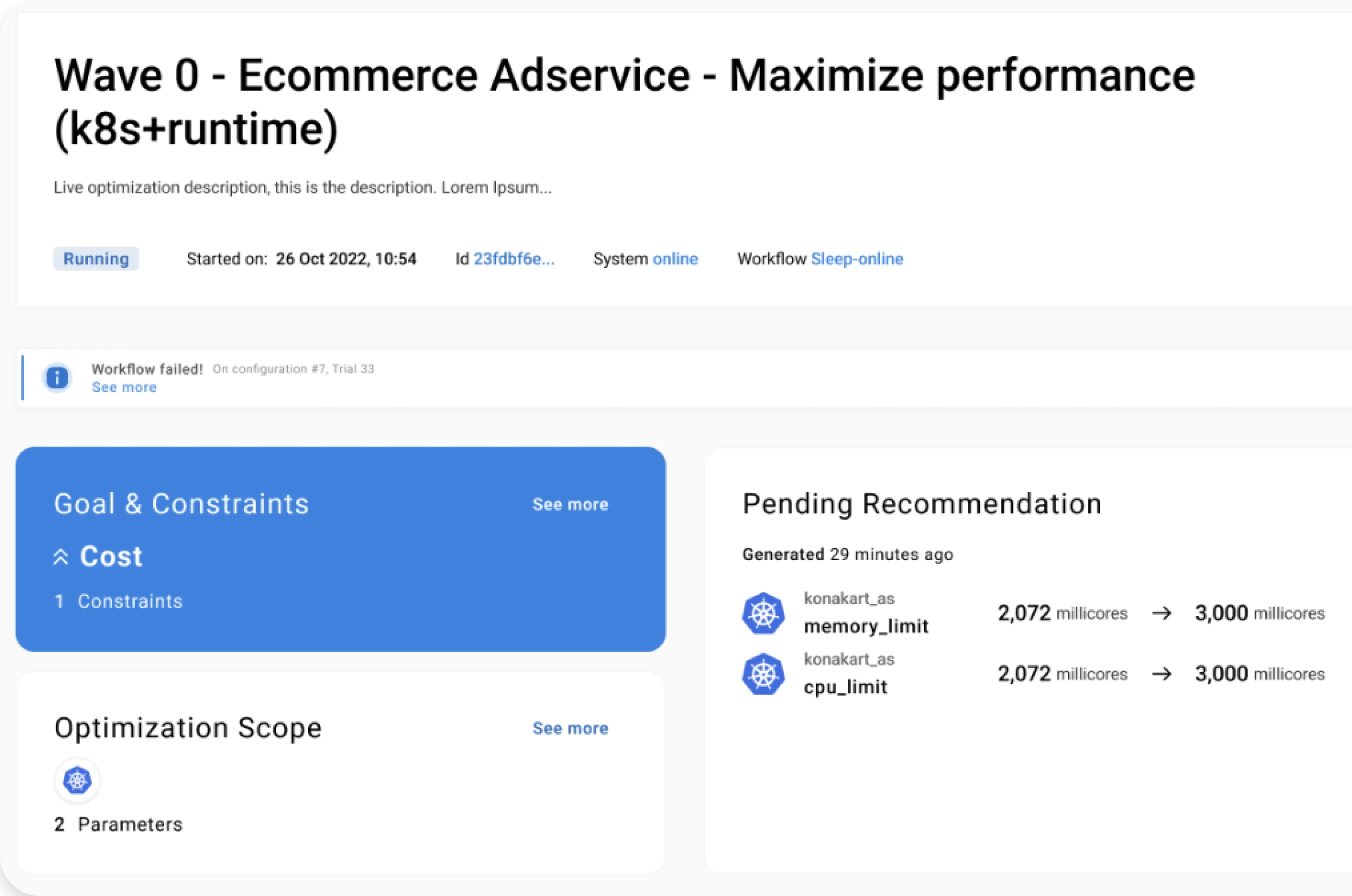

K8s pod autoscaling with application-aware AI: In a session hosted by Dynatrace, I presented a new Akamas case study on K8s optimization. We focused on configuring HPA and pod resources to minimize costs while ensuring application reliability. Our approach tackles the same HPA challenges the Intuit team described and simplifies the tuning process, so applications can scale reliably and cost-effectively. Slide deck here.
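HPA and pod resources are hard to tune independently because a CPU Utilization target is measured as a percentage of the pod's CPU request. The hypothetical Python sketch below shows this coupling. It is not the Akamas approach. It runs a single step of the standard HPA formula, without stabilization or min/max bounds. The load stays fixed at four replicas each using 400 millicores, and only the request changes.

```python
import math


def utilization_pct(avg_usage_m: float, request_m: float) -> float:
    """CPU utilization as the HPA sees it: usage relative to the pod's request."""
    return 100.0 * avg_usage_m / request_m


def hpa_replicas(current: int, avg_usage_m: float, request_m: float,
                 target_pct: float, tolerance: float = 0.1) -> int:
    """One step of ceil(current * utilization / target), skipping changes within tolerance."""
    ratio = utilization_pct(avg_usage_m, request_m) / target_pct
    if abs(1.0 - ratio) <= tolerance:
        return current
    return math.ceil(current * ratio)


# Same traffic, same 70% target: only the CPU request differs.
for request_m in (500, 1000):
    replicas = hpa_replicas(current=4, avg_usage_m=400, request_m=request_m, target_pct=70)
    print(f"request={request_m}m -> {replicas} replicas, {replicas * request_m}m CPU requested")
```

With a 500m request, the HPA sees 80% utilization and scales to 5 replicas, for 2500m requested in total. With a 1000m request, it sees 40% and scales down to 3 replicas, for 3000m requested. Changing one setting silently changes the behavior of the other, which is why tuning them together matters.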

In summary, it’s encouraging to see K8s teams increasingly aware of the vast optimization potential within the platform. While K8s offers excellent capabilities, realizing its full efficiency and scalability benefits requires proactive tuning. It’s also exciting to observe the growing trend of using AI for K8s optimization. At Akamas, we’ve long advocated for AI-driven optimization and have developed an enterprise platform that addresses both cost and reliability challenges in K8s.

Author: Stefano Doni, Co-founder & CTO

© 2024 Akamas S.p.A. All rights reserved. – Via Schiaffino, 11 – 20158 Milan, Italy – P.IVA / VAT: 10584850969