
Is AI-powered autonomous optimization the answer to the Kubernetes dilemma?

Notice: An abridged version of this interview has been published as Akamas contributed content by TFIR for KubeCon + CloudNativeCon NA 2022 in Detroit, October 24-28.

Stefano Doni, CTO at Kubernetes optimization company Akamas, and long-time CMG contributor and Best Paper award winner, is a recognized expert in performance engineering challenges and hot topics like microservices and container optimization.

We asked Stefano to help us better understand an emerging discipline, which Stefano and his team refer to as AI-powered Autonomous Optimization, particularly in the context of the growing adoption of Kubernetes for microservices applications.

Hi Stefano, can you please help us to understand what “AI-powered Autonomous Optimization” is all about?

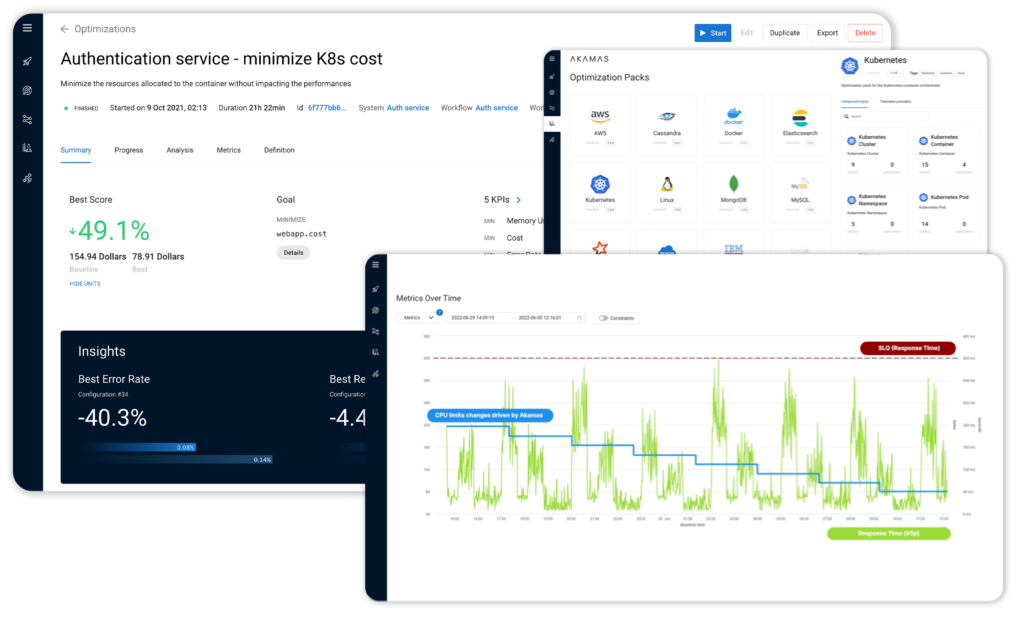

Simply put, “AI-powered Autonomous Optimization” is a new practice that leverages AI techniques to support performance engineers, developers and SREs in optimizing applications and maximizing their availability, performance, resilience and cost efficiency.

While this is the term we use at Akamas, this is a new discipline and there isn’t yet a standardized term to describe it. Gartner, for example, has recently introduced the term AI-Augmented Software Engineering (AIASE) to refer to the broader context of how AI can help improve different aspects of software engineering.

Why is this new discipline starting to emerge? What is the problem you are trying to address?

Great question! The question of “why now” is very relevant in this case.

Indeed, neither performance tuning nor infrastructure optimization is a new discipline, nor is the use of AI techniques: think, for example, of AIOps.

However, tuning today’s applications and their complex, multi-layered, hybrid infrastructure had already become an impossible challenge, even for the best experts. I can speak from experience, having worked as a performance engineer and capacity planning expert for almost 15 years. In fact, this is the reason I co-founded my company: to use the power of AI to help performance engineers like myself.

We have proven that only by leveraging AI techniques does it become possible to tune the hundreds of available parameters (e.g. JVM GC settings or EC2 instance types) and identify, among the thousands of possible configurations, those that deliver the optimal tradeoff between performance, resilience and cost. Our AI allows you to do that while also meeting any SLOs, such as response time or transaction throughput, and within a timeframe that is compatible with today’s release cycles.

Now, with the adoption of cloud-native applications and the need to optimize Kubernetes applications in real time, this challenge has reached yet another level. This is not simply a matter of delivering better performance or reducing costs: with Kubernetes, there are also very real performance and resilience risks.

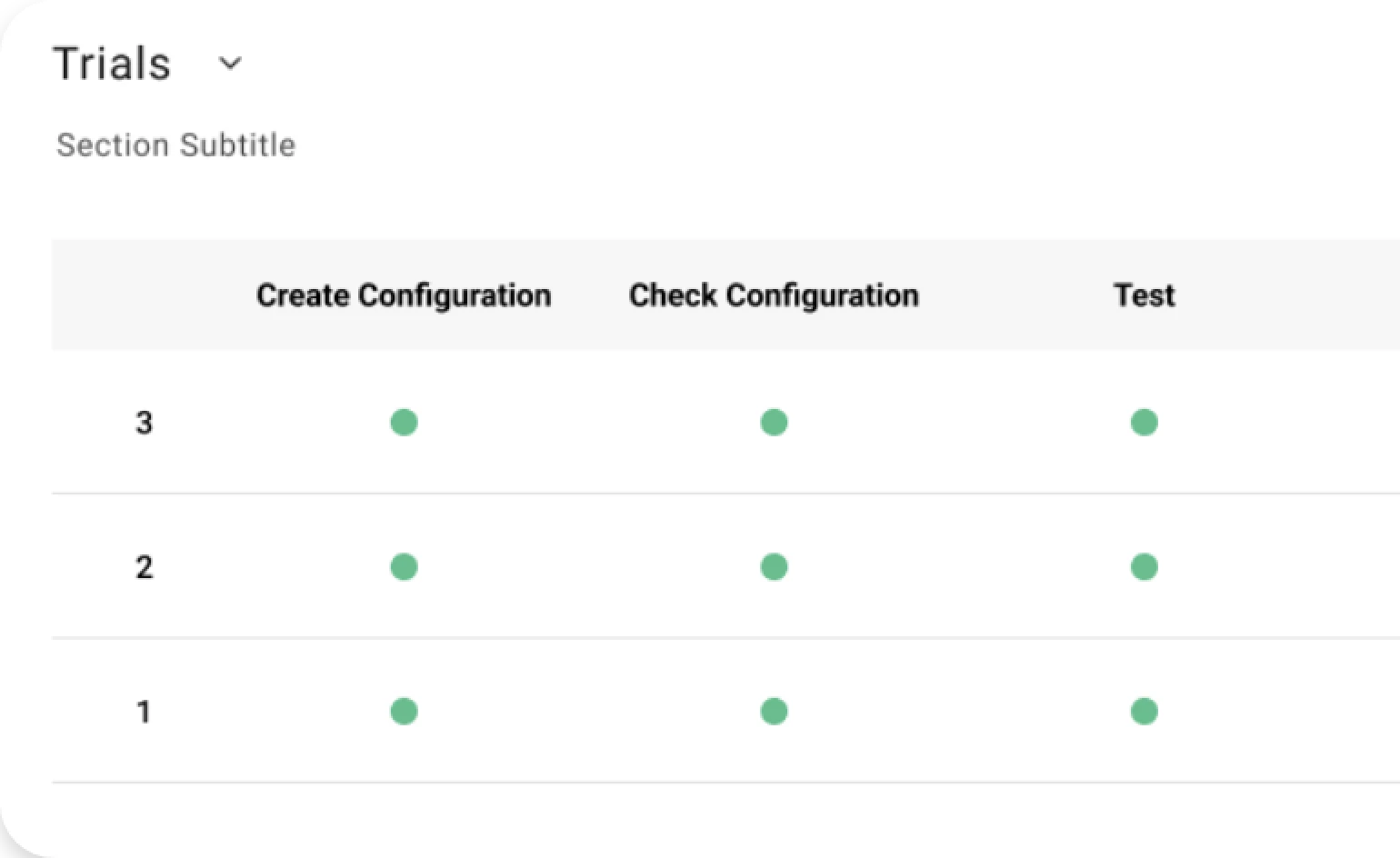

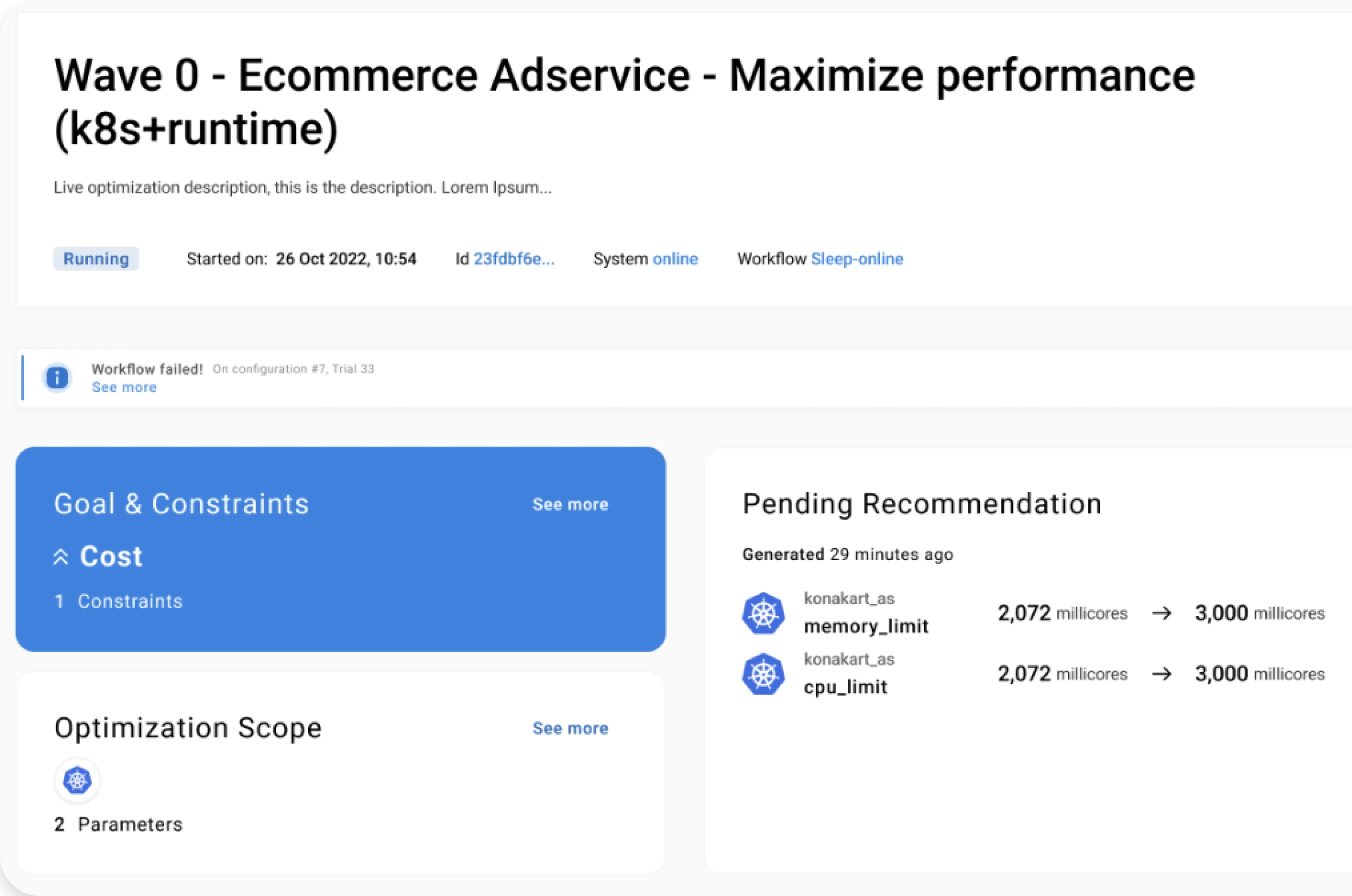

These challenges, opportunities and risks are driving the adoption of AI-powered tools. At Akamas, we demonstrated that AI can autonomously identify and recommend configurations based on real-time observations and live optimizations. We are still in a phase where experts prefer to review recommendations (i.e. human-in-the-loop mode) before letting AI autonomously apply any configurations, but that is the general direction.

Can you please elaborate on why Kubernetes applications represent an additional challenge?

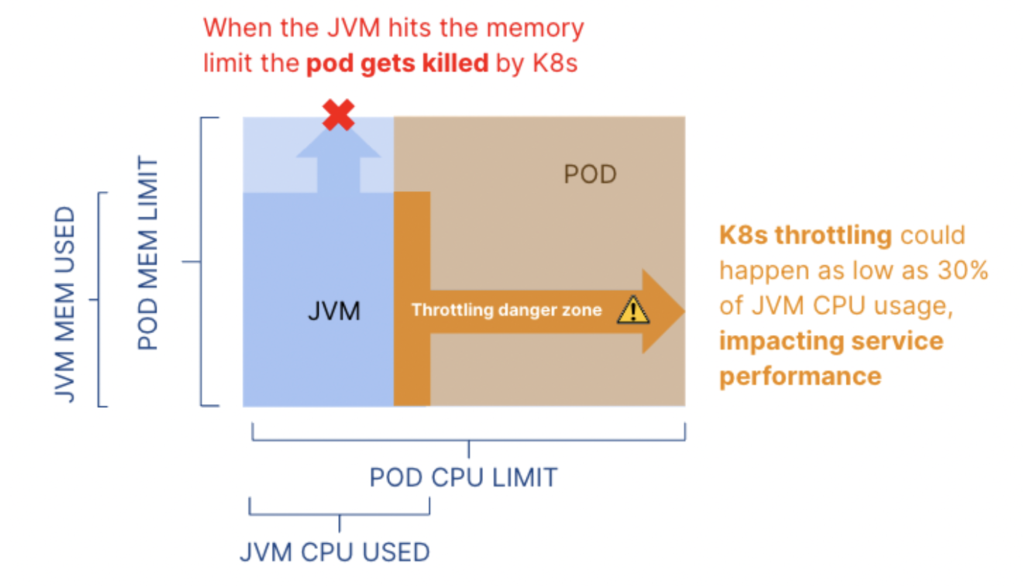

Well, first of all it’s because of the way Kubernetes manages memory and CPU resources. Despite the growing adoption of Kubernetes, these mechanisms are not well known. Even less known are the so-called ergonomics of application runtimes, such as the JVM. The interplay between these mechanisms can dramatically affect the cost, performance and availability of applications.

This is why we advocate for an “application-aware” approach for optimizing Kubernetes applications and talk about “AI-powered, autonomous, application-aware optimization” – which I have to admit is a mouthful, until you realize it can be shortened to “triple A optimization”.

Jokes aside, we worked closely with customers who tried using optimization tools only at the container layer. The problem is that by only considering container size, in terms of CPU and memory requests and limits, you can miss significant cost efficiency and performance opportunities. You can also end up under-provisioning and degrading end-to-end application performance.

This represents a real dilemma for anyone interested in optimizing Kubernetes applications, until they realize the power of AI-powered optimization.

Can you be more specific? Can you describe the opportunities of autonomous optimization for Kubernetes applications?

Yes, let me be more specific.

Let’s assume the goal is to reduce costs of Kubernetes environments, which are primarily driven by the resource demands of application pods/containers. How can we find the minimal resource settings that do not impact application performance and reliability?

The key thing is to realize that the resource consumption of a container is largely driven by the application runtime. For example, in a Java microservice, memory usage is driven by the JVM, which is what actually allocates and frees memory. And JVM memory usage depends upon the JVM configuration (heap size, GC, etc.). So we routinely see Java microservices with huge memory usage simply because developers assigned 4 GB of memory to all Java microservices as a “best practice”.

A tool that simply seeks to adapt pod resource requests and limits to the observed resource usage is going to leave quite a bit of potential cost savings on the table. That’s why it’s key to optimize both runtime and Kubernetes resource settings, together. And this also applies to other runtime-based languages and platforms like Golang, Node.js and .NET.
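To put rough numbers on this, the sketch below compares a usage-based recommendation with one that also resizes the JVM heap. It is an illustrative model only, not Akamas code: the `JavaPod` fields, the 20% headroom, the heap factor of 2 and all sample values are assumptions, and it assumes that a long-running JVM eventually grows its heap up to the configured maximum.

```python
from dataclasses import dataclass


@dataclass
class JavaPod:
    max_heap_mib: int   # configured maximum heap (-Xmx)
    live_data_mib: int  # heap still in use after a full GC (hypothetical measurement)
    non_heap_mib: int   # metaspace, thread stacks, code cache (assumed constant)


def observed_usage_mib(pod: JavaPod) -> int:
    # Simplifying assumption: the heap has grown to its configured maximum.
    return pod.max_heap_mib + pod.non_heap_mib


def usage_based_limit_mib(pod: JavaPod, headroom: float = 1.2) -> int:
    # Container-only view: size the pod around what the JVM currently occupies.
    return round(observed_usage_mib(pod) * headroom)


def runtime_aware_limit_mib(pod: JavaPod, heap_factor: float = 2.0,
                            headroom: float = 1.2) -> int:
    # Runtime-aware view: first shrink the heap towards what the application
    # needs, then size the container around the tuned JVM.
    tuned_heap = min(pod.max_heap_mib, round(pod.live_data_mib * heap_factor))
    return round((tuned_heap + pod.non_heap_mib) * headroom)


pod = JavaPod(max_heap_mib=3072, live_data_mib=400, non_heap_mib=300)
usage_based = usage_based_limit_mib(pod)
runtime_aware = runtime_aware_limit_mib(pod)
print(f"usage-based limit: {usage_based} MiB, runtime-aware limit: {runtime_aware} MiB")
```

In this toy case the usage-based recommendation stays close to the original 4 GB, because the observed usage mostly reflects the heap the JVM was allowed to take rather than what the application needs.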

Interesting. And what about the potential risks driving the adoption of autonomous optimization for Kubernetes applications?

The risk here is related to how to avoid impacting application performance and reliability.

As we mentioned, the way Kubernetes manages resources is pretty tricky. We often see DevOps teams surprised that Kubernetes is slowing down application performance through CPU throttling even when CPU usage is low. And because application runtimes configure themselves when running in a container, we have also seen a lot of strange behaviors, such as the JVM autonomously deciding to change the garbage collector and the size of its memory pools depending upon the container memory/CPU requests/limits.
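The CPU throttling surprise can be reproduced with simple arithmetic. Kubernetes enforces CPU limits through the Linux CFS quota, by default over 100 ms periods, so a multi-threaded burst can exhaust its quota early in a period and then wait until the next one. The sketch below is a simplified model of the latency of one burst of CPU work; the function name and the workload numbers are hypothetical.

```python
CFS_PERIOD_MS = 100.0  # default CFS period used for Kubernetes CPU limits


def burst_latency_ms(cpu_limit_cores: float, threads: int, cpu_work_ms: float) -> float:
    """Wall-clock time to finish a burst needing cpu_work_ms of total CPU time,
    spread evenly over `threads` runnable threads (simplified model)."""
    if threads <= cpu_limit_cores:
        # The threads cannot consume quota faster than it is refilled.
        return cpu_work_ms / threads
    quota_ms = cpu_limit_cores * CFS_PERIOD_MS  # CPU time allowed per period
    latency = 0.0
    remaining = cpu_work_ms
    while remaining > quota_ms:
        # Quota is used up before the period ends; the container is
        # throttled until the next period starts.
        remaining -= quota_ms
        latency += CFS_PERIOD_MS
    return latency + remaining / threads


work_ms = 250.0      # CPU time needed by one burst (hypothetical)
interval_ms = 1000.0  # one burst per second (hypothetical)

throttled = burst_latency_ms(cpu_limit_cores=1.0, threads=8, cpu_work_ms=work_ms)
unthrottled = burst_latency_ms(cpu_limit_cores=8.0, threads=8, cpu_work_ms=work_ms)
avg_cores_used = work_ms / interval_ms

print(f"average usage: {avg_cores_used} cores")
print(f"latency with 1-core limit: {throttled} ms, with 8-core limit: {unthrottled} ms")
```

In this model the container uses on average a quarter of its 1-core limit, yet each burst takes 206.25 ms instead of 31.25 ms, which is why average CPU usage alone is a poor indicator of throttling risk.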

Why does that matter? Because if your approach to cutting costs is simply based on reducing CPU/memory resources, you are in for some surprises. Your application might slow down (or crash) in weird ways, when you least expect it.
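As a rough illustration of how runtime defaults follow the container size, the sketch below models two commonly documented HotSpot ergonomics for a JVM started without an explicit heap size or GC flag. The assumptions are: the processor count is derived from the CPU limit rounded up, the default maximum heap is 25% of the memory limit (small containers follow different rules), and G1 is chosen only on a "server-class" machine with at least 2 CPUs and 1792 MB of memory, with Serial GC otherwise. Exact behavior varies by JVM version and vendor, so this is not a substitute for checking the actual runtime.

```python
import math


def jvm_defaults(cpu_limit_cores: float, memory_limit_mib: int) -> dict:
    """Approximate JVM defaults inside a container (simplified, see assumptions)."""
    cpus = max(1, math.ceil(cpu_limit_cores))
    server_class = cpus >= 2 and memory_limit_mib >= 1792
    return {
        "available_processors": cpus,
        "default_gc": "G1" if server_class else "Serial",
        "default_max_heap_mib": memory_limit_mib * 25 // 100,
    }


before = jvm_defaults(cpu_limit_cores=2.0, memory_limit_mib=4096)
after_cpu_cut = jvm_defaults(cpu_limit_cores=1.0, memory_limit_mib=4096)
after_mem_cut = jvm_defaults(cpu_limit_cores=2.0, memory_limit_mib=1024)

for label, cfg in [("before", before), ("CPU cut", after_cpu_cut), ("memory cut", after_mem_cut)]:
    print(label, cfg)
```

Under these assumptions, halving the CPU limit or cutting the memory limit silently switches the collector from G1 to Serial and shrinks the heap, without any change to the application or its JVM flags.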

Which brings us to our next question. What are the top capabilities to consider when choosing a tool to optimize Kubernetes applications?

Based on my experience with customer environments, my top three required capabilities are: 1) ability to define goals and constraints (such as SLOs), 2) ability to operate full-stack, in particular to incorporate both the application runtime and container layers and 3) safety.

First of all, different optimization goals are required for different applications, and also for the same application, at different times. Today the goal might be to make my application capable of processing more orders, while tomorrow it might be to save costs, while ensuring response time SLOs.

Tools that only support pre-defined optimization goals, such as cost reduction, and that do not allow users to specify custom optimization functions and metrics, are not suitable for the real world.

Similarly inadequate are tools that only report how SLOs have been impacted after changes have been applied, without taking SLOs into account when looking for the optimal configuration.
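A minimal sketch of what "goals and constraints" means in practice: the goal is a user-supplied function to minimize or maximize, and SLOs are constraints that a configuration must satisfy before it is even considered. The `Trial` type, the `best_trial` helper and all the measurements below are hypothetical and only illustrate the idea; they do not describe how any specific tool searches the configuration space.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Trial:
    name: str
    monthly_cost_usd: float
    p95_response_ms: float
    throughput_rps: float


Constraint = Callable[[Trial], bool]


def best_trial(trials: List[Trial], goal: Callable[[Trial], float],
               constraints: List[Constraint], maximize: bool = False) -> Optional[Trial]:
    # Discard configurations that violate any constraint, then rank by the goal.
    feasible = [t for t in trials if all(check(t) for check in constraints)]
    if not feasible:
        return None
    return max(feasible, key=goal) if maximize else min(feasible, key=goal)


# Hypothetical measurements for four candidate configurations.
trials = [
    Trial("baseline", 1000.0, 180.0, 500.0),
    Trial("small", 600.0, 320.0, 450.0),
    Trial("tuned", 700.0, 190.0, 520.0),
    Trial("large", 1400.0, 120.0, 700.0),
]
slo = [lambda t: t.p95_response_ms <= 200.0]

cheapest = best_trial(trials, goal=lambda t: t.monthly_cost_usd, constraints=slo)
fastest = best_trial(trials, goal=lambda t: t.throughput_rps, constraints=slo, maximize=True)
print(cheapest.name, fastest.name)
```

With the same data and the same SLO, the two goals select different configurations, and dropping the SLO constraint would make the cost goal pick the "small" configuration that breaks the response time target.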

Second. Ideally, recommended configurations include parameters at the different layers of the application and the underlying infrastructure. As we see again and again, for Kubernetes microservices applications, it is imperative that both the runtime and container layers are considered.

Let me re-emphasize this point: this is not just to take advantage of opportunities to improve cost efficiency and performance, but to prevent major availability and performance risks. So, customers should be very careful when selecting tools that only focus on the infrastructure or operate only at the container level.

Lastly, safety is a major topic: when you are optimizing applications in a production environment, with dynamically varying workloads and unexpected issues, safety is not a nice-to-have. This is a major research area for us, which we started working on quite some time ago, and which led us to introduce safety policy features such as gradual optimization, smart constraints and outlier detection. For those interested, we have some materials on our website.

Can you tell us more about your vision for the role of AI-powered optimization in the future of Kubernetes?

At Akamas we have been following the gradual emergence of Kubernetes as the de-facto standard platform for cloud-native applications and we see interesting similarities with the technology disruption brought by VMware technologies for virtualization.

While the Kubernetes technology is evolving and its adoption is growing fast, there is also an urgent need for more sophisticated automation and control mechanisms.

The Kubernetes native scaling mechanisms, HPA (Horizontal Pod Autoscaler) and VPA (Vertical Pod Autoscaler), in addition to having different maturity levels, suffer from several limitations and cannot be used in combination. The most critical limitation is that these autoscaling mechanisms are governed only by resource thresholds and do not take into account any feedback from measured application performance. Moreover, autoscalers cannot take into account how the application runtime manages its resources. Finally, static autoscaling settings may not always be adequate under varying workloads. As a result, service SLOs may be negatively impacted.
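The threshold-driven nature of HPA is visible in its documented scaling rule, which computes the desired replica count as the current count multiplied by the ratio between the current and target metric values, rounded up, with a default tolerance of 10% around the target. The sketch below reproduces that rule for CPU utilization, which HPA expresses as a percentage of the pod's CPU request; the helper names and sample values are illustrative, and details such as stabilization windows and not-yet-ready pods are left out.

```python
import math


def utilization_pct(usage_millicores: float, request_millicores: float) -> float:
    # HPA resource utilization is measured relative to the pod's request.
    return 100.0 * usage_millicores / request_millicores


def hpa_desired_replicas(current_replicas: int, current_pct: float,
                         target_pct: float, tolerance: float = 0.1) -> int:
    ratio = current_pct / target_pct
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: no scaling
    return math.ceil(current_replicas * ratio)


# Same CPU usage per pod (450m), two different CPU requests.
with_small_request = hpa_desired_replicas(4, utilization_pct(450, 500), target_pct=60)
with_large_request = hpa_desired_replicas(4, utilization_pct(450, 1000), target_pct=60)
print(with_small_request, with_large_request)
```

Nothing in this rule refers to response time or throughput, and the outcome changes with the pod's CPU request even when actual usage is identical, which is why requests and autoscaling thresholds need to be tuned together.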

This situation resembles what we saw about 20 years ago, when the first automated resource management solutions were introduced to replace the VMware native DRS (Distributed Resource Scheduler) mechanisms. We believe that the capabilities of AI-powered autonomous optimization are now needed in order to maximize the cost efficiency of Kubernetes applications while also ensuring service quality, at any point in time.

At Akamas, we have validated our “smart auto-scaling” approach that replaces VPA while also making HPA more effective by dynamically adjusting both pod resource requests and HPA autoscaling thresholds. We believe this represents a killer feature that will make AI-powered optimization a mandatory element of any Kubernetes environment running critical applications.

Thanks Stefano. It was really nice talking to you. Would you like to recommend any additional materials or leave a final message?

Thanks for having me here and thanks for all your good questions.

There are several interesting resources on these topics. First, let me mention a couple of my own blog entries, on Kubernetes resource management and CPU throttling and on JVM garbage collector types. I would also mention “Containerize your Java applications” by Microsoft, to understand JVM default ergonomics, and the 2022 State of the Java Ecosystem report by New Relic, which provides interesting statistics about the millions of Java apps observed, in particular when running in Kubernetes containers.

And of course, I hope to meet you all at KubeCon in Detroit – please come and visit our booth there – I would be pleased to meet you all in person.

…

To learn more about Kubernetes optimization with Akamas, visit the Akamas Kubernetes Optimization solution page and the Akamas resource center.



Author:

Stefano Doni

Co-founder & CTO


© 2024 Akamas S.p.A. All rights reserved. – Via Schiaffino, 11 – 20158 Milan, Italy – P.IVA / VAT: 10584850969