Efficiency is one of Kubernetes’ top benefits, yet companies adopting Kubernetes often experience high infrastructure costs and performance issues, with applications failing to meet their latency SLOs. Even for experienced Performance Engineers and SREs, sizing resource requests and limits so that applications meet their SLOs can be a real challenge due to the complexity of Kubernetes resource management mechanisms.

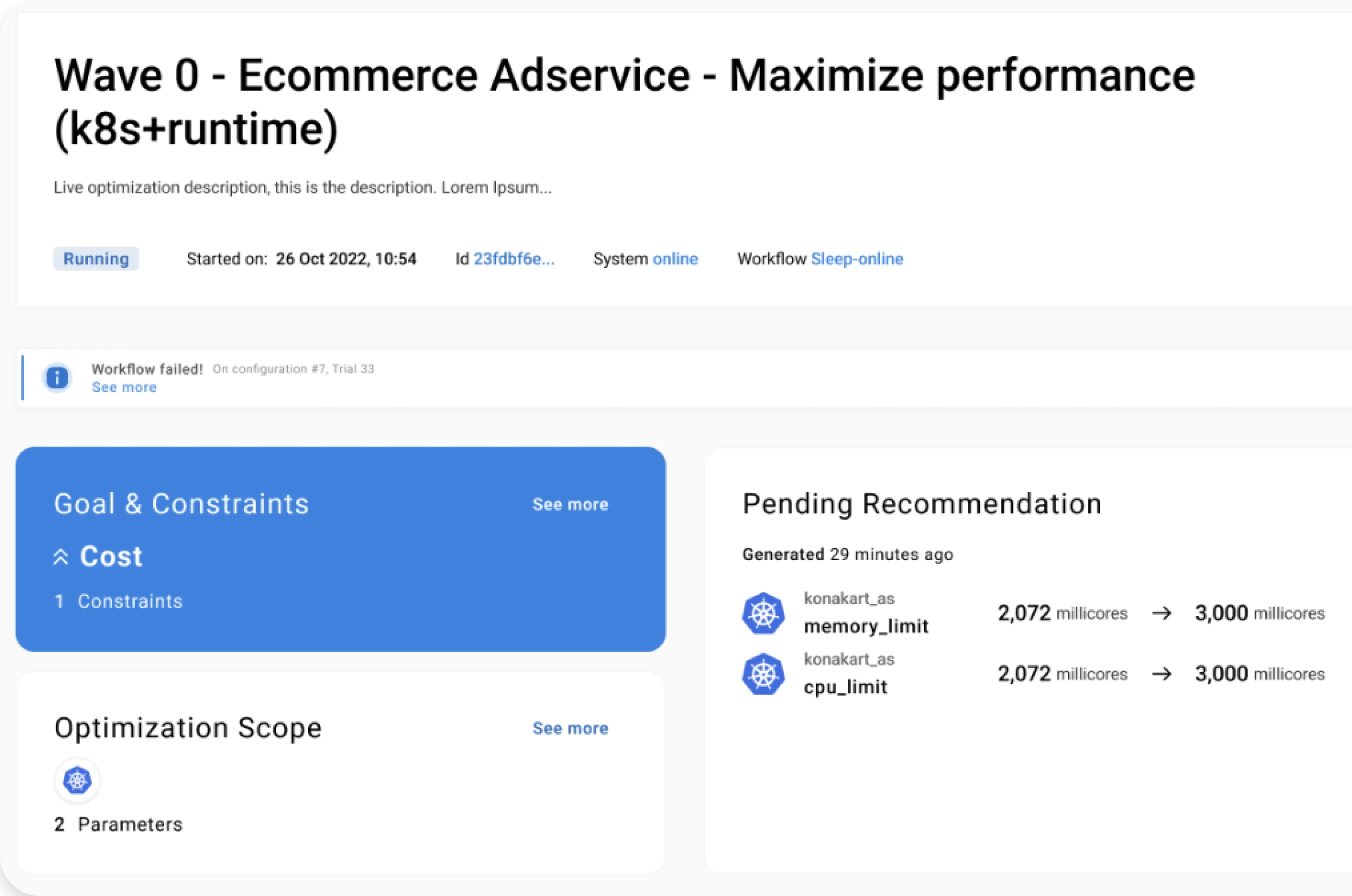

In this talk at Conf42 SRE on September 30, 2021, Stefano Doni (CTO of Akamas) describes how AI techniques help optimize Kubernetes and meet SLOs. During his session, Stefano discussed how to apply the Akamas ML-based approach to a Kubernetes microservices application, with the goal of improving cost efficiency and latency by automatically tuning container sizing and JVM options. He demonstrated how this approach allows SREs to achieve higher application performance in days instead of months, by simply defining goals and constraints for each specific service (e.g. minimize resource footprint while meeting latency and throughput SLOs) and letting ML-based optimization find the best configuration.
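
To make the "goals and constraints" framing concrete, here is a minimal Python sketch. It is not the Akamas API or its optimization algorithm: the `Trial` class, the SLO thresholds, the footprint formula, and all measurements are hypothetical, chosen only to illustrate the idea. The sketch treats the SLOs as constraints, treats resource footprint as the goal to minimize, and picks the cheapest configuration among those that satisfy the constraints.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Trial:
    """One tested configuration and its measured results (values are made up)."""
    cpu_request_millicores: int
    memory_request_mib: int
    jvm_max_heap_mib: int
    p95_latency_ms: float
    throughput_rps: float


# Hypothetical SLO thresholds, used as constraints.
MAX_P95_LATENCY_MS = 200.0
MIN_THROUGHPUT_RPS = 100.0


def footprint(trial: Trial) -> float:
    """Goal to minimize: an assumed score of CPU cores plus memory in GiB."""
    return trial.cpu_request_millicores / 1000 + trial.memory_request_mib / 1024


def meets_slos(trial: Trial) -> bool:
    """Constraints: latency and throughput must both satisfy the SLOs."""
    return (trial.p95_latency_ms <= MAX_P95_LATENCY_MS
            and trial.throughput_rps >= MIN_THROUGHPUT_RPS)


def best_config(trials: List[Trial]) -> Optional[Trial]:
    """Return the smallest-footprint trial that meets the SLOs, if any."""
    feasible = [t for t in trials if meets_slos(t)]
    return min(feasible, key=footprint) if feasible else None


trials = [
    Trial(2000, 4096, 3072, p95_latency_ms=120.0, throughput_rps=150.0),
    Trial(1000, 2048, 1536, p95_latency_ms=180.0, throughput_rps=110.0),
    Trial(500, 1024, 768, p95_latency_ms=350.0, throughput_rps=60.0),
]

best = best_config(trials)
print(best)
```

In this toy data the smallest configuration is rejected because it violates both SLOs, so the middle one is selected. A real ML-based optimizer would additionally propose new configurations to test based on earlier results, rather than choosing from a fixed list as this sketch does.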